Ambari Metrics Monitor Fails to Start on Rocky Linux 8

# I. Symptoms and Environment

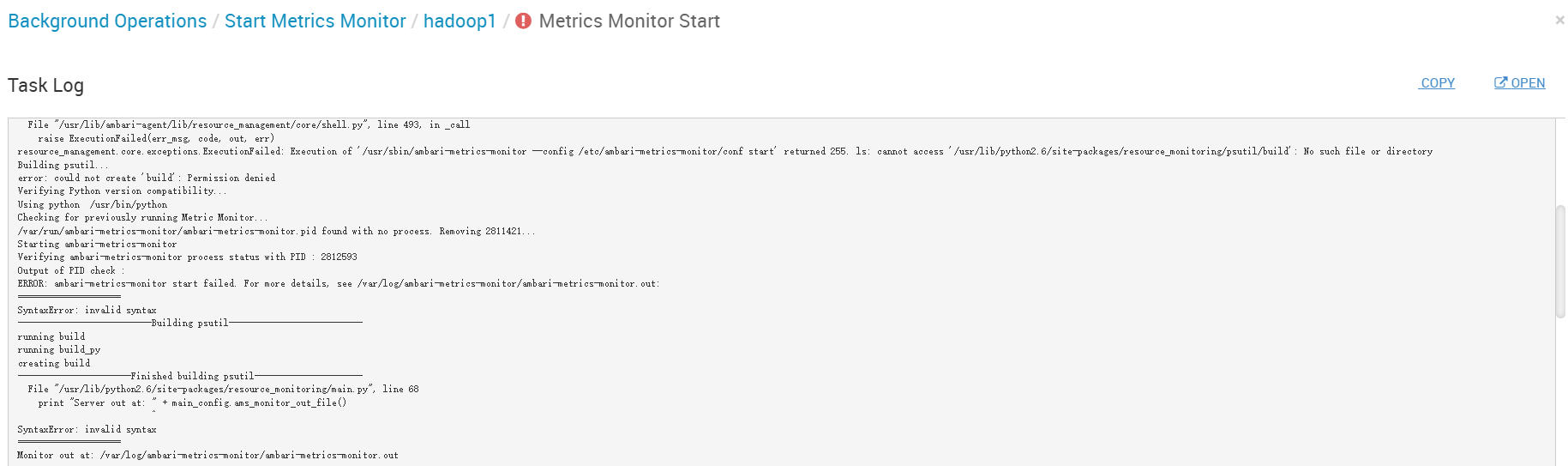

Symptoms

Ambari Metrics Monitor fails to start with exit code 255. The log contains both a psutil build failure and a Python syntax error:

    resource_management.core.exceptions.ExecutionFailed: Execution of '/usr/sbin/ambari-metrics-monitor --config /etc/ambari-metrics-monitor/conf start' returned 255. ls: cannot access '/usr/lib/python2.6/site-packages/resource_monitoring/psutil/build': No such file or directory
    Building psutil...
    error: could not create 'build': Permission denied
    ...
    SyntaxError: invalid syntax
    File "/usr/lib/python2.6/site-packages/resource_monitoring/main.py", line 68
    print "Server out at: " + main_config.ams_monitor_out_file()
    ^
    SyntaxError: invalid syntax

Environment: Rocky Linux 8 / Stack 3.2.0 / system default Python 3.9

Process user: ams:hadoop (managed by Ambari)

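# II. Quick Diagnosis

| Dimension | Symptom | Root cause | Conclusion |
|---|---|---|---|
| Python version | `SyntaxError` on `print "..."` | This is Python 2 syntax being executed by a Python 3 interpreter | The Monitor component depends on Python 2, but the default `python` currently points to Python 3 |
| psutil build | `Permission denied` under `/usr/lib/python2.6/.../psutil/build` | The `ams` user writes into the system-level site-packages, and the target directory (2.6) is unexpected | The site-packages version path does not match (it should be 2.7), and there is also a directory permission problem |
| Directory version | Path is fixed to `python2.6` | Older scripts hardcode the path or assemble it from variables that resolve to 2.6 | A directory adaptation (2.6 ↔ 2.7 mapping) is needed for compatibility |

Conclusion

This is a two-part problem: 1) the Monitor's Python code is written for Python 2 but is executed under Python 3; 2) psutil has to be built in the Python 2 site directory, but both the path and the permissions are wrong.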
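To check quickly whether a host shows the same two signatures, the log can be scanned for the exact error strings quoted above. The following is only a sketch: the function name `diagnose_monitor_log` and the two labels it prints are made up for illustration, and the file passed in is assumed to be either `/var/log/ambari-metrics-monitor/ambari-metrics-monitor.out` or a saved copy of the failed task's stderr.

```bash
# Hypothetical helper (not part of Ambari): report which of the two failure
# signatures from this post appear in a given log file.
# Exit status: 0 = at least one signature found, 1 = none found, 2 = unreadable file.
diagnose_monitor_log() {
    local log_file="$1"
    local status=1

    if [ ! -r "$log_file" ]; then
        echo "cannot read: $log_file" >&2
        return 2
    fi

    # Python 2 source being parsed by a Python 3 interpreter.
    if grep -q "SyntaxError: invalid syntax" "$log_file"; then
        echo "python-version-mismatch"
        status=0
    fi

    # psutil build directory not writable by the Monitor user.
    if grep -q "could not create 'build': Permission denied" "$log_file"; then
        echo "psutil-build-permission"
        status=0
    fi

    return "$status"
}
```

For example, `diagnose_monitor_log /var/log/ambari-metrics-monitor/ambari-metrics-monitor.out` prints one label per detected problem, so a host that needs only one of the two fixes is easy to spot.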

# III. Fix Steps (Run in Order)

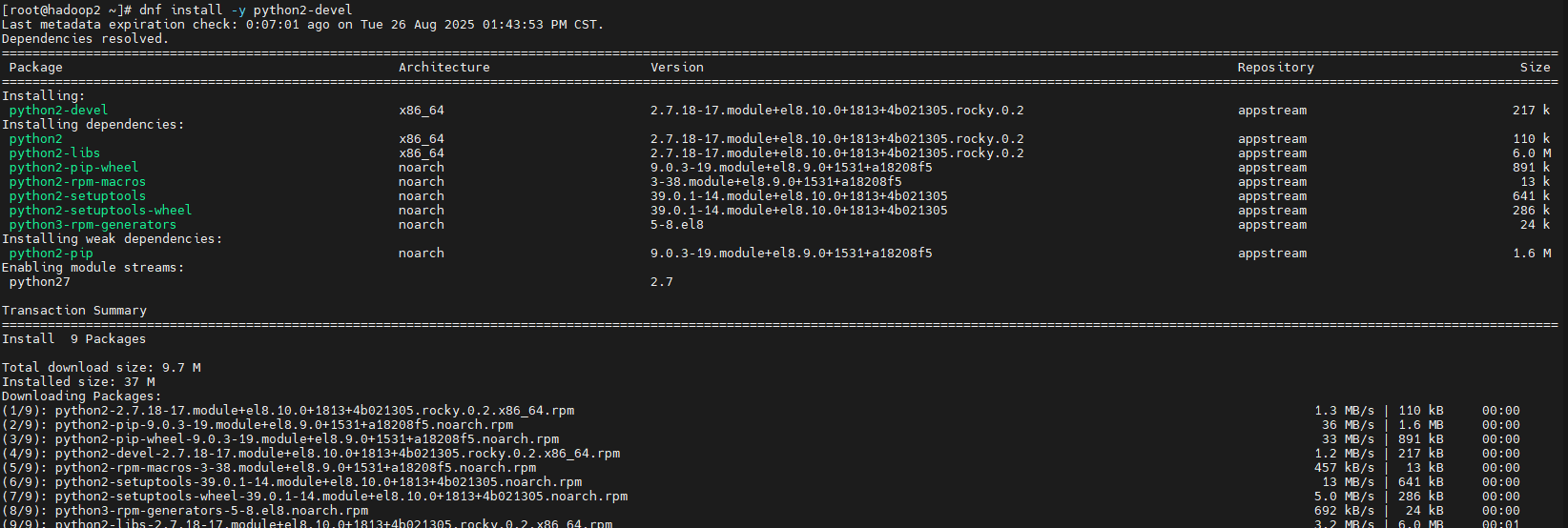

# 1. Install Python 2 and build dependencies

    dnf install -y python2 python2-devel gcc gcc-c++ make

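# 2. Temporarily point the system default python to Python 2

Ambari Agent/Monitor still depend on Python 2, so the priority is to keep them runnable. In production this can be managed through `alternatives`; the direct approach is shown here:

    ln -sf /usr/bin/python2 /usr/bin/python
    python --version   # should report Python 2.7.x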
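Because the startup log prints `Using python /usr/bin/python`, it helps to confirm which major version that path resolves to before retrying. The helper below is a minimal sketch with a made-up name (`python_major`); it only parses the `--version` output of whatever interpreter it is given. For the `alternatives` route, RHEL 8 family systems normally switch the unversioned command with `alternatives --set python /usr/bin/python2`; treat that as something to verify on your own host rather than as part of this sketch.

```bash
# Hypothetical helper: print the major version ("2" or "3") of an interpreter.
python_major() {
    local interpreter="${1:-python}"
    local version_line

    # Python 2 prints --version to stderr and Python 3 to stdout, so merge both.
    version_line="$("$interpreter" --version 2>&1)" || return 1

    # "Python 2.7.18" -> "2.7.18" -> "2"
    version_line="${version_line#Python }"
    printf '%s\n' "${version_line%%.*}"
}
```

A guard such as `[ "$(python_major /usr/bin/python)" = "2" ] || echo "python does not resolve to Python 2"` can then be run before restarting the component.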

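# 3. Fix the site-packages directory and permissions

Some packages hardcode the `python2.6` path in older scripts, while python2 on Rocky 8 is actually 2.7. Create a backward-compatible mapping here and make sure `ams` can write to the build directory.

    # 1) If the 2.6 directory does not exist, create a compatibility symlink to 2.7
    [ -d /usr/lib/python2.6 ] || ln -s /usr/lib/python2.7 /usr/lib/python2.6

    # 2) Make sure the ams user can write to this directory
    #    (only needed while the Monitor builds; tighten afterwards as needed)
    chown -R ams:hadoop /usr/lib/python2.6
    chmod -R 0755 /usr/lib/python2.6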
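If handing the whole `/usr/lib/python2.6` tree to `ams` feels too broad, a narrower variant is to prepare only the `resource_monitoring` subtree, which is where the failing `psutil/build` path lives. The raw log at the end of this post shows Ambari doing the same thing for the `python3.9` path. This is a sketch under that assumption, not a tested replacement for the commands above; the function name `prepare_psutil_build_dir` is hypothetical.

```bash
# Hypothetical helper: create the psutil build directory under a site-packages
# root and give only the resource_monitoring subtree to the given owner.
prepare_psutil_build_dir() {
    local site_packages="$1"   # e.g. /usr/lib/python2.6/site-packages
    local owner="$2"           # e.g. ams:hadoop
    local module_dir="${site_packages}/resource_monitoring"
    local build_dir="${module_dir}/psutil/build"

    mkdir -p "$build_dir" || return 1
    chown -R "$owner" "$module_dir" || return 1

    # Print the prepared path so the caller can log or verify it.
    printf '%s\n' "$build_dir"
}
```

Run as root, `prepare_psutil_build_dir /usr/lib/python2.6/site-packages ams:hadoop` would leave the rest of the Python tree owned by root.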

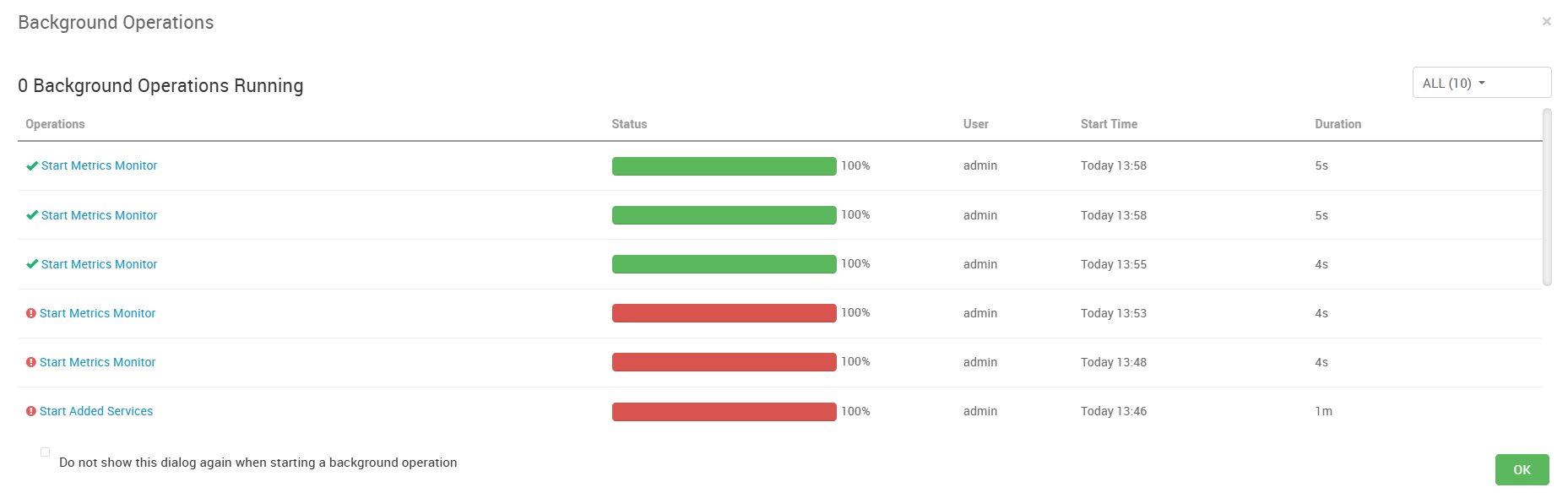

# 4. Have Ambari start the Monitor again

    ambari-server restart   # if not required, restarting only the Agent/component is enough
    # or, in Ambari Web, run Retry/Start on Metrics Monitor
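After the retry, it is worth confirming that the Monitor process actually stayed up, since the original failure left a PID file with no process behind it. The check below is a minimal sketch: `monitor_running` is a made-up name, and the default PID file path is the one that appears in the error log of this post.

```bash
# Hypothetical helper: succeed only if the PID file exists, holds a numeric PID,
# and that process is alive. Run it as root or as the process owner; otherwise
# kill -0 fails with a permission error even when the process exists.
monitor_running() {
    local pid_file="${1:-/var/run/ambari-metrics-monitor/ambari-metrics-monitor.pid}"
    local pid

    [ -r "$pid_file" ] || return 1
    pid="$(head -n 1 "$pid_file" | tr -d '[:space:]')"

    # Reject empty or non-numeric content.
    case "$pid" in
        ''|*[!0-9]*) return 1 ;;
    esac

    # Signal 0 only tests whether the process can be signalled; nothing is sent.
    kill -0 "$pid" 2>/dev/null
}
```

For example, `monitor_running && echo "Monitor is up" || echo "Monitor is not running"`.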

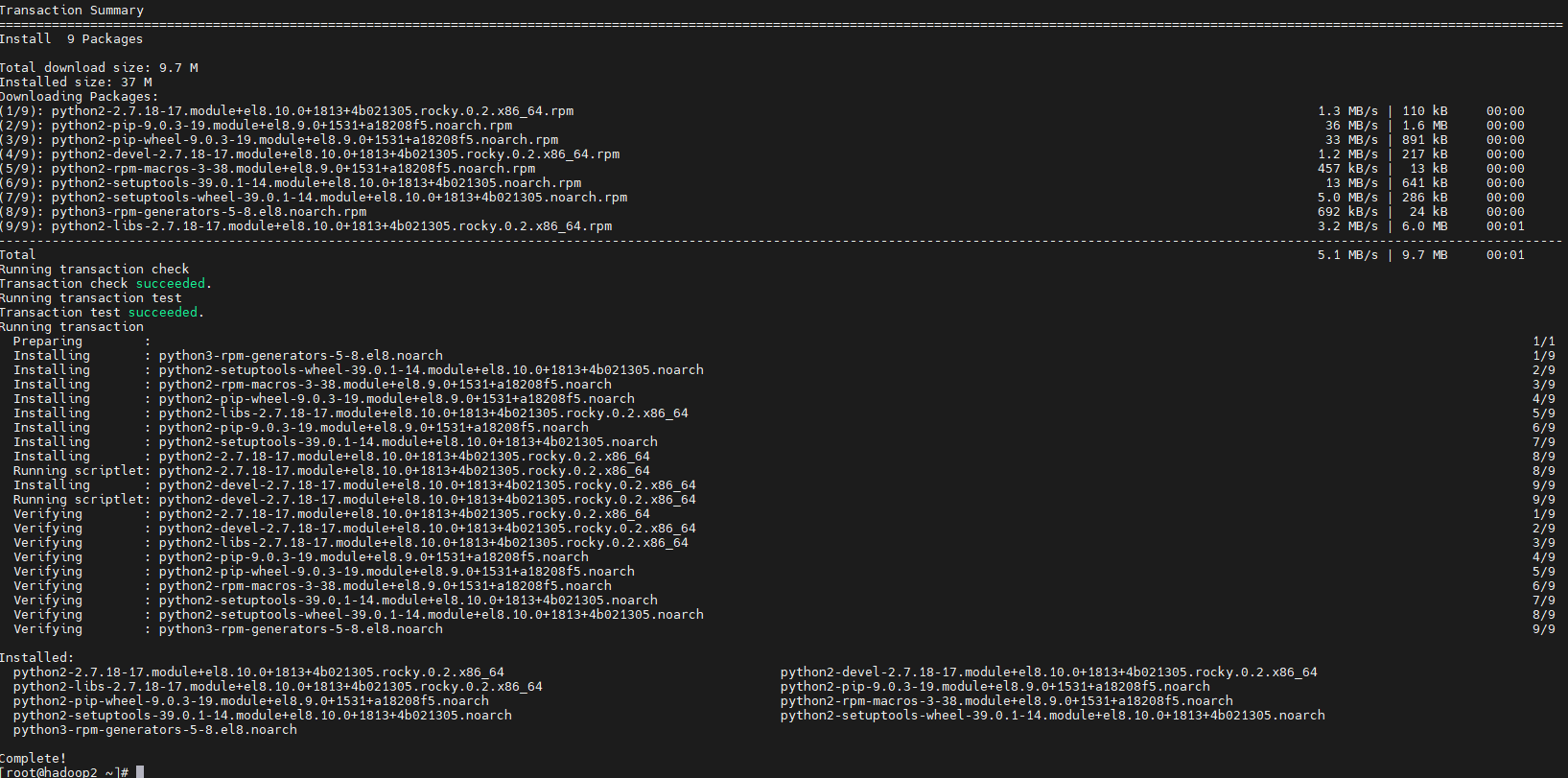

# IV. Original Error Log

stderr:

NoneType: None

The above exception was the cause of the following exception:

Traceback (most recent call last):

File "/var/lib/ambari-agent/cache/common-services/AMBARI_METRICS/3.0.0/package/scripts/metrics_monitor.py", line 86, in <module>

AmsMonitor().execute()

File "/usr/lib/ambari-agent/lib/resource_management/libraries/script/script.py", line 413, in execute

method(env)

File "/var/lib/ambari-agent/cache/common-services/AMBARI_METRICS/3.0.0/package/scripts/metrics_monitor.py", line 47, in start

ams_service("monitor", action="start")

File "/usr/lib/ambari-agent/lib/ambari_commons/os_family_impl.py", line 91, in thunk

return fn(*args, **kwargs)

File "/var/lib/ambari-agent/cache/common-services/AMBARI_METRICS/3.0.0/package/scripts/ams_service.py", line 114, in ams_service

Execute(daemon_cmd, user=params.ams_user)

File "/usr/lib/ambari-agent/lib/resource_management/core/base.py", line 168, in __init__

self.env.run()

File "/usr/lib/ambari-agent/lib/resource_management/core/environment.py", line 171, in run

self.run_action(resource, action)

File "/usr/lib/ambari-agent/lib/resource_management/core/environment.py", line 137, in run_action

provider_action()

File "/usr/lib/ambari-agent/lib/resource_management/core/providers/system.py", line 350, in action_run

returns=self.resource.returns,

File "/usr/lib/ambari-agent/lib/resource_management/core/shell.py", line 95, in inner

result = function(command, **kwargs)

File "/usr/lib/ambari-agent/lib/resource_management/core/shell.py", line 161, in checked_call

returns=returns,

File "/usr/lib/ambari-agent/lib/resource_management/core/shell.py", line 278, in _call_wrapper

result = _call(command, **kwargs_copy)

File "/usr/lib/ambari-agent/lib/resource_management/core/shell.py", line 493, in _call

raise ExecutionFailed(err_msg, code, out, err)

resource_management.core.exceptions.ExecutionFailed: Execution of '/usr/sbin/ambari-metrics-monitor --config /etc/ambari-metrics-monitor/conf start' returned 255. ls: cannot access '/usr/lib/python2.6/site-packages/resource_monitoring/psutil/build': No such file or directory

Building psutil...

error: could not create 'build': Permission denied

Verifying Python version compatibility...

Using python /usr/bin/python

Checking for previously running Metric Monitor...

/var/run/ambari-metrics-monitor/ambari-metrics-monitor.pid found with no process. Removing 2811421...

Starting ambari-metrics-monitor

Verifying ambari-metrics-monitor process status with PID : 2812593

Output of PID check :

ERROR: ambari-metrics-monitor start failed. For more details, see /var/log/ambari-metrics-monitor/ambari-metrics-monitor.out:

====================

SyntaxError: invalid syntax

--------------------------Building psutil--------------------------

running build

running build_py

creating build

----------------------Finished building psutil---------------------

File "/usr/lib/python2.6/site-packages/resource_monitoring/main.py", line 68

print "Server out at: " + main_config.ams_monitor_out_file()

^

SyntaxError: invalid syntax

====================

Monitor out at: /var/log/ambari-metrics-monitor/ambari-metrics-monitor.out

stdout:

2025-08-26 13:48:07,170 - Stack Feature Version Info: Cluster Stack=3.2.0, Command Stack=None, Command Version=3.2.0 -> 3.2.0

2025-08-26 13:48:07,188 - Using hadoop conf dir: /etc/hadoop/conf

2025-08-26 13:48:07,569 - Stack Feature Version Info: Cluster Stack=3.2.0, Command Stack=None, Command Version=3.2.0 -> 3.2.0

2025-08-26 13:48:07,571 - Using hadoop conf dir: /etc/hadoop/conf

2025-08-26 13:48:07,571 - Skipping param: datanode_max_locked_memory, due to Configuration parameter 'dfs.datanode.max.locked.memory' was not found in configurations dictionary!

2025-08-26 13:48:07,572 - Skipping param: dfs_ha_namenode_ids, due to Configuration parameter 'dfs.ha.namenodes' was not found in configurations dictionary!

2025-08-26 13:48:07,572 - Skipping param: falcon_user, due to Configuration parameter 'falcon-env' was not found in configurations dictionary!

2025-08-26 13:48:07,572 - Skipping param: gmetad_user, due to Configuration parameter 'ganglia-env' was not found in configurations dictionary!

2025-08-26 13:48:07,572 - Skipping param: gmond_user, due to Configuration parameter 'ganglia-env' was not found in configurations dictionary!

2025-08-26 13:48:07,572 - Skipping param: nfsgateway_heapsize, due to Configuration parameter 'nfsgateway_heapsize' was not found in configurations dictionary!

2025-08-26 13:48:07,572 - Skipping param: oozie_user, due to Configuration parameter 'oozie-env' was not found in configurations dictionary!

2025-08-26 13:48:07,572 - Skipping param: ranger_group, due to Configuration parameter 'ranger-env' was not found in configurations dictionary!

2025-08-26 13:48:07,572 - Skipping param: ranger_user, due to Configuration parameter 'ranger-env' was not found in configurations dictionary!

2025-08-26 13:48:07,572 - Skipping param: repo_info, due to Configuration parameter 'repoInfo' was not found in configurations dictionary!

2025-08-26 13:48:07,573 - Skipping param: tez_am_view_acls, due to Configuration parameter 'tez-site' was not found in configurations dictionary!

2025-08-26 13:48:07,573 - Skipping param: tez_user, due to Configuration parameter 'tez-env' was not found in configurations dictionary!

2025-08-26 13:48:07,573 - Skipping param: zeppelin_group, due to Configuration parameter 'zeppelin-env' was not found in configurations dictionary!

2025-08-26 13:48:07,573 - Skipping param: zeppelin_user, due to Configuration parameter 'zeppelin-env' was not found in configurations dictionary!

2025-08-26 13:48:07,573 - Group['hdfs'] {}

2025-08-26 13:48:07,574 - Group['hadoop'] {}

2025-08-26 13:48:07,574 - User['zookeeper'] {'uid': None, 'gid': 'hadoop', 'groups': ['hadoop'], 'fetch_nonlocal_groups': True}

2025-08-26 13:48:07,578 - User['ams'] {'uid': None, 'gid': 'hadoop', 'groups': ['hadoop'], 'fetch_nonlocal_groups': True}

2025-08-26 13:48:07,580 - User['ambari-qa'] {'uid': None, 'gid': 'hadoop', 'groups': ['hadoop'], 'fetch_nonlocal_groups': True}

2025-08-26 13:48:07,582 - User['hdfs'] {'uid': None, 'gid': 'hadoop', 'groups': ['hdfs', 'hadoop'], 'fetch_nonlocal_groups': True}

2025-08-26 13:48:07,583 - User['yarn'] {'uid': None, 'gid': 'hadoop', 'groups': ['hadoop'], 'fetch_nonlocal_groups': True}

2025-08-26 13:48:07,585 - User['mapred'] {'uid': None, 'gid': 'hadoop', 'groups': ['hadoop'], 'fetch_nonlocal_groups': True}

2025-08-26 13:48:07,587 - User['hbase'] {'uid': None, 'gid': 'hadoop', 'groups': ['hadoop'], 'fetch_nonlocal_groups': True}

2025-08-26 13:48:07,589 - File['/var/lib/ambari-agent/tmp/changeUid.sh'] {'content': StaticFile('changeToSecureUid.sh'), 'mode': 0o555}

2025-08-26 13:48:07,590 - Execute['/var/lib/ambari-agent/tmp/changeUid.sh ambari-qa /tmp/hadoop-ambari-qa,/tmp/hsperfdata_ambari-qa,/home/ambari-qa,/tmp/ambari-qa,/tmp/sqoop-ambari-qa 0'] {'not_if': '(test $(id -u ambari-qa) -gt 1000) || (false)'}

2025-08-26 13:48:07,598 - Skipping Execute['/var/lib/ambari-agent/tmp/changeUid.sh ambari-qa /tmp/hadoop-ambari-qa,/tmp/hsperfdata_ambari-qa,/home/ambari-qa,/tmp/ambari-qa,/tmp/sqoop-ambari-qa 0'] due to not_if

2025-08-26 13:48:07,598 - Directory['/tmp/hbase-hbase'] {'owner': 'hbase', 'mode': 0o775, 'create_parents': True, 'cd_access': 'a'}

2025-08-26 13:48:07,600 - File['/var/lib/ambari-agent/tmp/changeUid.sh'] {'content': StaticFile('changeToSecureUid.sh'), 'mode': 0o555}

2025-08-26 13:48:07,600 - File['/var/lib/ambari-agent/tmp/changeUid.sh'] {'content': StaticFile('changeToSecureUid.sh'), 'mode': 0o555}

2025-08-26 13:48:07,601 - call['/var/lib/ambari-agent/tmp/changeUid.sh hbase'] {}

2025-08-26 13:48:07,612 - call returned (0, '1017')

2025-08-26 13:48:07,613 - Execute['/var/lib/ambari-agent/tmp/changeUid.sh hbase /home/hbase,/tmp/hbase,/usr/bin/hbase,/var/log/hbase,/tmp/hbase-hbase 1017'] {'not_if': '(test $(id -u hbase) -gt 1000) || (false)'}

2025-08-26 13:48:07,621 - Skipping Execute['/var/lib/ambari-agent/tmp/changeUid.sh hbase /home/hbase,/tmp/hbase,/usr/bin/hbase,/var/log/hbase,/tmp/hbase-hbase 1017'] due to not_if

2025-08-26 13:48:07,623 - User['hdfs'] {'fetch_nonlocal_groups': True}

2025-08-26 13:48:07,625 - User['hdfs'] {'groups': ['hdfs', 'hadoop'], 'fetch_nonlocal_groups': True}

2025-08-26 13:48:07,627 - FS Type: HDFS

2025-08-26 13:48:07,627 - Directory['/etc/hadoop'] {'mode': 0o755}

2025-08-26 13:48:07,655 - File['/etc/hadoop/conf/hadoop-env.sh'] {'owner': 'hdfs', 'group': 'hadoop', 'content': InlineTemplate(...)}

2025-08-26 13:48:07,656 - Writing File['/etc/hadoop/conf/hadoop-env.sh'] because contents don't match

2025-08-26 13:48:07,657 - Changing owner for /tmp/tmp1756187287.6573842_513 from 0 to hdfs

2025-08-26 13:48:07,657 - Changing group for /tmp/tmp1756187287.6573842_513 from 0 to hadoop

2025-08-26 13:48:07,658 - Moving /tmp/tmp1756187287.6573842_513 to /etc/hadoop/conf/hadoop-env.sh

2025-08-26 13:48:07,664 - Directory['/var/lib/ambari-agent/tmp/hadoop_java_io_tmpdir'] {'owner': 'hdfs', 'group': 'hadoop', 'mode': 0o1777}

2025-08-26 13:48:07,698 - Skipping param: hdfs_user_keytab, due to Configuration parameter 'hdfs_user_keytab' was not found in configurations dictionary!

2025-08-26 13:48:07,700 - Execute[('setenforce', '0')] {'only_if': 'test -f /selinux/enforce', 'not_if': '(! which getenforce ) || (which getenforce && getenforce | grep -q Disabled)', 'sudo': True}

2025-08-26 13:48:07,710 - Skipping Execute[('setenforce', '0')] due to not_if

2025-08-26 13:48:07,710 - Directory['/var/log/hadoop'] {'create_parents': True, 'owner': 'root', 'group': 'hadoop', 'mode': 0o775, 'cd_access': 'a'}

2025-08-26 13:48:07,713 - Directory['/var/run/hadoop'] {'create_parents': True, 'owner': 'root', 'group': 'root', 'cd_access': 'a'}

2025-08-26 13:48:07,713 - Directory['/var/run/hadoop/hdfs'] {'owner': 'hdfs', 'cd_access': 'a'}

2025-08-26 13:48:07,714 - Directory['/tmp/hadoop-hdfs'] {'create_parents': True, 'owner': 'hdfs', 'cd_access': 'a'}

2025-08-26 13:48:07,719 - File['/etc/hadoop/conf/commons-logging.properties'] {'owner': 'hdfs', 'content': Template('commons-logging.properties.j2')}

2025-08-26 13:48:07,719 - Writing File['/etc/hadoop/conf/commons-logging.properties'] because contents don't match

2025-08-26 13:48:07,720 - Changing owner for /tmp/tmp1756187287.7201147_289 from 0 to hdfs

2025-08-26 13:48:07,720 - Moving /tmp/tmp1756187287.7201147_289 to /etc/hadoop/conf/commons-logging.properties

2025-08-26 13:48:07,726 - File['/etc/hadoop/conf/health_check'] {'owner': 'hdfs', 'content': Template('health_check.j2')}

2025-08-26 13:48:07,727 - Writing File['/etc/hadoop/conf/health_check'] because contents don't match

2025-08-26 13:48:07,727 - Changing owner for /tmp/tmp1756187287.7272584_414 from 0 to hdfs

2025-08-26 13:48:07,727 - Moving /tmp/tmp1756187287.7272584_414 to /etc/hadoop/conf/health_check

2025-08-26 13:48:07,741 - File['/etc/hadoop/conf/log4j.properties'] {'mode': 0o644, 'group': 'hadoop', 'owner': 'hdfs', 'content': InlineTemplate(...)}

2025-08-26 13:48:07,742 - Writing File['/etc/hadoop/conf/log4j.properties'] because contents don't match

2025-08-26 13:48:07,742 - Changing owner for /tmp/tmp1756187287.742593_745 from 0 to hdfs

2025-08-26 13:48:07,743 - Changing group for /tmp/tmp1756187287.742593_745 from 0 to hadoop

2025-08-26 13:48:07,743 - Moving /tmp/tmp1756187287.742593_745 to /etc/hadoop/conf/log4j.properties

2025-08-26 13:48:07,749 - File['/var/lib/ambari-agent/lib/fast-hdfs-resource.jar'] {'mode': 0o644, 'content': StaticFile('fast-hdfs-resource.jar')}

2025-08-26 13:48:07,786 - File['/etc/hadoop/conf/hadoop-metrics2.properties'] {'owner': 'hdfs', 'group': 'hadoop', 'content': InlineTemplate(...)}

2025-08-26 13:48:07,786 - Writing File['/etc/hadoop/conf/hadoop-metrics2.properties'] because contents don't match

2025-08-26 13:48:07,786 - Changing owner for /tmp/tmp1756187287.7866137_897 from 0 to hdfs

2025-08-26 13:48:07,786 - Changing group for /tmp/tmp1756187287.7866137_897 from 0 to hadoop

2025-08-26 13:48:07,787 - Moving /tmp/tmp1756187287.7866137_897 to /etc/hadoop/conf/hadoop-metrics2.properties

2025-08-26 13:48:07,791 - File['/etc/hadoop/conf/task-log4j.properties'] {'content': StaticFile('task-log4j.properties'), 'mode': 0o755}

2025-08-26 13:48:07,792 - File['/etc/hadoop/conf/configuration.xsl'] {'owner': 'hdfs', 'group': 'hadoop'}

2025-08-26 13:48:07,797 - File['/etc/hadoop/conf/topology_mappings.data'] {'content': Template('topology_mappings.data.j2'), 'owner': 'hdfs', 'group': 'hadoop', 'mode': 0o644, 'only_if': 'test -d /etc/hadoop/conf'}

2025-08-26 13:48:07,802 - Writing File['/etc/hadoop/conf/topology_mappings.data'] because contents don't match

2025-08-26 13:48:07,802 - Changing owner for /tmp/tmp1756187287.8024964_210 from 0 to hdfs

2025-08-26 13:48:07,802 - Changing group for /tmp/tmp1756187287.8024964_210 from 0 to hadoop

2025-08-26 13:48:07,803 - Moving /tmp/tmp1756187287.8024964_210 to /etc/hadoop/conf/topology_mappings.data

2025-08-26 13:48:07,807 - File['/etc/hadoop/conf/topology_script.py'] {'content': StaticFile('topology_script.py'), 'mode': 0o755, 'only_if': 'test -d /etc/hadoop/conf'}

2025-08-26 13:48:07,811 - Skipping unlimited key JCE policy check and setup since the Java VM is not managed by Ambari

2025-08-26 13:48:07,818 - Skipping stack-select on AMBARI_METRICS because it does not exist in the stack-select package structure.

2025-08-26 13:48:08,279 - Using hadoop conf dir: /etc/hadoop/conf

2025-08-26 13:48:08,281 - checked_call['hostid'] {}

2025-08-26 13:48:08,286 - checked_call returned (0, '000aa063')

2025-08-26 13:48:08,288 - Skipping param: hbase_drain_only, due to Configuration parameter 'mark_draining_only' was not found in configurations dictionary!

2025-08-26 13:48:08,289 - Skipping param: hbase_excluded_hosts, due to Configuration parameter 'excluded_hosts' was not found in configurations dictionary!

2025-08-26 13:48:08,289 - Skipping param: hbase_included_hosts, due to Configuration parameter 'included_hosts' was not found in configurations dictionary!

2025-08-26 13:48:08,289 - Skipping param: hdfs_principal_name, due to Configuration parameter 'hdfs_principal_name' was not found in configurations dictionary!

2025-08-26 13:48:08,289 - Skipping param: hdfs_user_keytab, due to Configuration parameter 'hdfs_user_keytab' was not found in configurations dictionary!

2025-08-26 13:48:08,290 - Directory['/etc/ambari-metrics-monitor/conf'] {'owner': 'ams', 'group': 'hadoop', 'create_parents': True}

2025-08-26 13:48:08,292 - Directory['/var/log/ambari-metrics-monitor'] {'owner': 'ams', 'group': 'hadoop', 'mode': 0o755, 'create_parents': True}

2025-08-26 13:48:08,293 - Execute['ambari-sudo.sh chown -R ams:hadoop /var/log/ambari-metrics-monitor'] {}

2025-08-26 13:48:08,300 - Directory['/var/run/ambari-metrics-monitor'] {'owner': 'ams', 'group': 'hadoop', 'cd_access': 'a', 'mode': 0o755, 'create_parents': True}

2025-08-26 13:48:08,302 - Directory['/usr/lib/python3.9/site-packages/resource_monitoring/psutil/build'] {'owner': 'ams', 'group': 'hadoop', 'cd_access': 'a', 'mode': 0o755, 'create_parents': True}

2025-08-26 13:48:08,302 - Execute['ambari-sudo.sh chown -R ams:hadoop /usr/lib/python3.9/site-packages/resource_monitoring'] {}

2025-08-26 13:48:08,311 - TemplateConfig['/etc/ambari-metrics-monitor/conf/metric_monitor.ini'] {'owner': 'ams', 'group': 'hadoop', 'template_tag': None}

2025-08-26 13:48:08,325 - File['/etc/ambari-metrics-monitor/conf/metric_monitor.ini'] {'owner': 'ams', 'group': 'hadoop', 'mode': None, 'content': Template('metric_monitor.ini.j2')}

2025-08-26 13:48:08,325 - Writing File['/etc/ambari-metrics-monitor/conf/metric_monitor.ini'] because contents don't match

2025-08-26 13:48:08,326 - Changing owner for /tmp/tmp1756187288.3263984_381 from 0 to ams

2025-08-26 13:48:08,326 - Changing group for /tmp/tmp1756187288.3263984_381 from 0 to hadoop

2025-08-26 13:48:08,327 - Moving /tmp/tmp1756187288.3263984_381 to /etc/ambari-metrics-monitor/conf/metric_monitor.ini

2025-08-26 13:48:08,332 - TemplateConfig['/etc/ambari-metrics-monitor/conf/metric_groups.conf'] {'owner': 'ams', 'group': 'hadoop', 'template_tag': None}

2025-08-26 13:48:08,334 - File['/etc/ambari-metrics-monitor/conf/metric_groups.conf'] {'owner': 'ams', 'group': 'hadoop', 'mode': None, 'content': Template('metric_groups.conf.j2')}

2025-08-26 13:48:08,334 - Writing File['/etc/ambari-metrics-monitor/conf/metric_groups.conf'] because contents don't match

2025-08-26 13:48:08,335 - Changing owner for /tmp/tmp1756187288.3348098_82 from 0 to ams

2025-08-26 13:48:08,335 - Changing group for /tmp/tmp1756187288.3348098_82 from 0 to hadoop

2025-08-26 13:48:08,335 - Moving /tmp/tmp1756187288.3348098_82 to /etc/ambari-metrics-monitor/conf/metric_groups.conf

2025-08-26 13:48:08,349 - File['/etc/ambari-metrics-monitor/conf/ams-env.sh'] {'owner': 'ams', 'content': InlineTemplate(...)}

2025-08-26 13:48:08,350 - Writing File['/etc/ambari-metrics-monitor/conf/ams-env.sh'] because contents don't match

2025-08-26 13:48:08,351 - Changing owner for /tmp/tmp1756187288.3508477_244 from 0 to ams

2025-08-26 13:48:08,351 - Moving /tmp/tmp1756187288.3508477_244 to /etc/ambari-metrics-monitor/conf/ams-env.sh

2025-08-26 13:48:08,357 - Directory['/etc/security/limits.d'] {'create_parents': True, 'owner': 'root', 'group': 'root'}

2025-08-26 13:48:08,360 - File['/etc/security/limits.d/ams.conf'] {'owner': 'root', 'group': 'root', 'mode': 0o644, 'content': Template('ams.conf.j2')}

2025-08-26 13:48:08,361 - Writing File['/etc/security/limits.d/ams.conf'] because contents don't match

2025-08-26 13:48:08,361 - Moving /tmp/tmp1756187288.3614178_963 to /etc/security/limits.d/ams.conf

2025-08-26 13:48:08,367 - Execute['/usr/sbin/ambari-metrics-monitor --config /etc/ambari-metrics-monitor/conf start'] {'user': 'ams'}

2025-08-26 13:48:10,889 - Execute['find /var/log/ambari-metrics-monitor -maxdepth 1 -type f -name '*' -exec echo '==> {} <==' \; -exec tail -n 40 {} \;'] {'logoutput': True, 'ignore_failures': True, 'user': 'ams'}

==> /var/log/ambari-metrics-monitor/ambari-metrics-monitor.out <==

--------------------------Building psutil--------------------------

running build

running build_py

creating build

----------------------Finished building psutil---------------------

File "/usr/lib/python2.6/site-packages/resource_monitoring/main.py", line 68

print "Server out at: " + main_config.ams_monitor_out_file()

^

SyntaxError: invalid syntax

--------------------------Building psutil--------------------------

running build

running build_py

creating build

----------------------Finished building psutil---------------------

File "/usr/lib/python2.6/site-packages/resource_monitoring/main.py", line 68

print "Server out at: " + main_config.ams_monitor_out_file()

^

SyntaxError: invalid syntax

2025-08-26 13:48:10,965 - Skipping stack-select on AMBARI_METRICS because it does not exist in the stack-select package structure.

Command failed after 1 tries
