Missing libmariadb.so.3 causes syncdb to fail on EL8-series systems


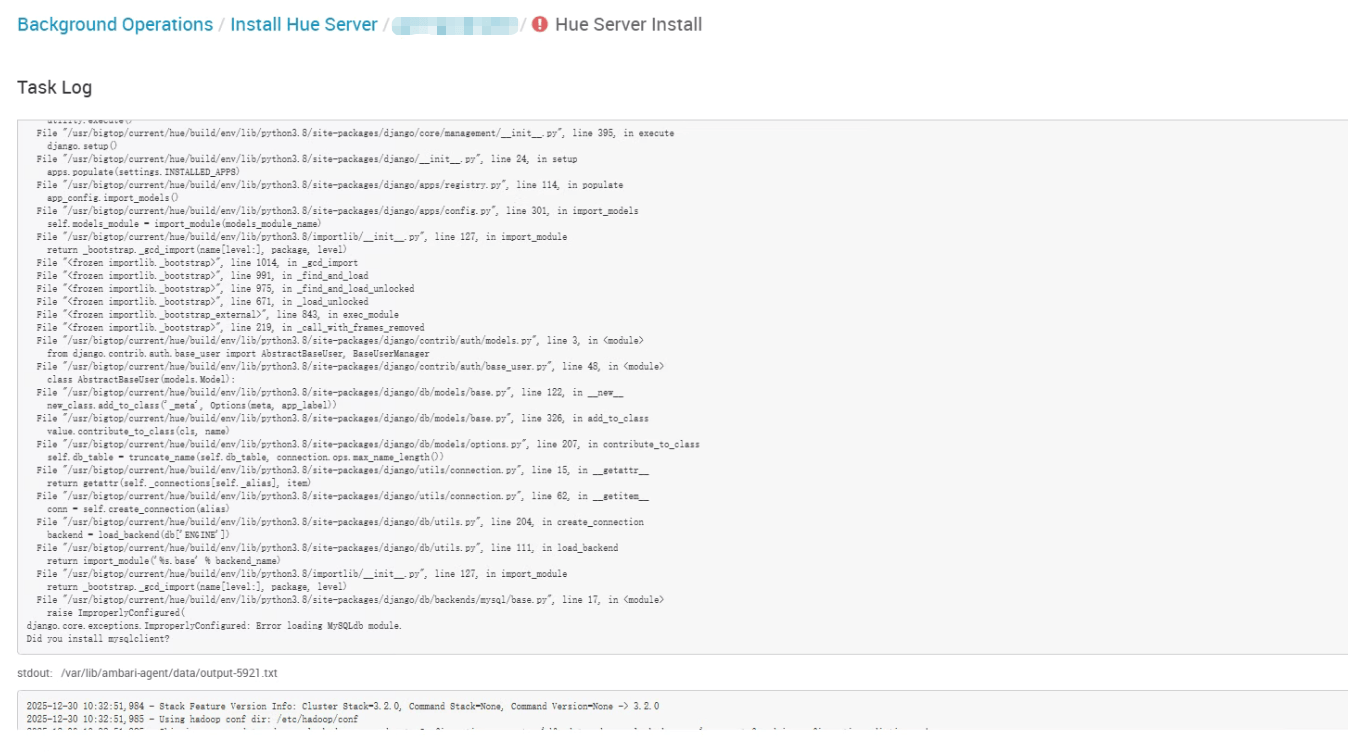

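# I. Symptom: Hue installation fails at the syncdb step

While deploying Hue Server, Ambari runs a one-time database initialization step:

- Command: hue syncdb --noinput

- Execution environment: the Hue Python virtual environment

- Purpose: create the metadata tables required by the Django ORM

If this step fails, Ambari marks the whole Hue installation as failed, and the component never reaches the RUNNING state.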

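Conclusion first

The problem has nothing to do with the Kerberos configuration, nor is it caused by a wrong Hue parameter. The root cause is a shared library missing at the operating-system level.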

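# II. Log signature: a long error chain with a single root cause

The log contains two closely related exceptions:

- First layer: ImportError: libmariadb.so.3: cannot open shared object file

- Second layer: Django raises ImproperlyConfigured: Error loading MySQLdb module. Did you install mysqlclient?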
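To triage this failure from Ambari's stderr without reading the whole traceback, the two layers can be picked out mechanically. The following is a minimal sketch, assuming the Ambari output has already been captured as a string. `diagnose_syncdb_log` is a hypothetical helper name, not part of Ambari or Hue.

```python
import re

# Matches the loader error that mysqlclient's C extension surfaces as ImportError,
# e.g. "ImportError: libmariadb.so.3: cannot open shared object file: ..."
MISSING_LIB_RE = re.compile(
    r"ImportError: (?P<soname>[^\s:]+): cannot open shared object file"
)
# Django's wrapper message, which points at mysqlclient rather than the real cause.
DJANGO_HINT = "Did you install mysqlclient?"


def diagnose_syncdb_log(log_text: str) -> dict:
    """Summarize the two layers of a failed `hue syncdb` run from captured output."""
    match = MISSING_LIB_RE.search(log_text)
    return {
        "missing_library": match.group("soname") if match else None,
        "django_hint_present": DJANGO_HINT in log_text,
    }


if __name__ == "__main__":
    sample = (
        "ImportError: libmariadb.so.3: cannot open shared object file: "
        "No such file or directory\n"
        "django.core.exceptions.ImproperlyConfigured: Error loading MySQLdb module.\n"
        "Did you install mysqlclient?\n"
    )
    print(diagnose_syncdb_log(sample))
```

If `missing_library` is set, the Django hint can be ignored and the named library is the thing to install.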

Full error log (kept verbatim)

stderr:

NoneType: None

The above exception was the cause of the following exception:

Traceback (most recent call last):

File "/var/lib/ambari-agent/cache/stacks/BIGTOP/3.2.0/services/HUE/package/scripts/hue_server.py", line 90, in <module>

hue_server().execute()

File "/usr/lib/ambari-agent/lib/resource_management/libraries/script/script.py", line 413, in execute

method(env)

File "/var/lib/ambari-agent/cache/stacks/BIGTOP/3.2.0/services/HUE/package/scripts/hue_server.py", line 41, in install

hue_service('hue_server', action='metastoresync', upgrade_type=None)

File "/var/lib/ambari-agent/cache/stacks/BIGTOP/3.2.0/services/HUE/package/scripts/hue_service.py", line 57, in hue_service

user=params.hue_user)

File "/usr/lib/ambari-agent/lib/resource_management/core/base.py", line 168, in __init__

self.env.run()

File "/usr/lib/ambari-agent/lib/resource_management/core/environment.py", line 171, in run

self.run_action(resource, action)

File "/usr/lib/ambari-agent/lib/resource_management/core/environment.py", line 137, in run_action

provider_action()

File "/usr/lib/ambari-agent/lib/resource_management/core/providers/system.py", line 350, in action_run

returns=self.resource.returns,

File "/usr/lib/ambari-agent/lib/resource_management/core/shell.py", line 95, in inner

result = function(command, **kwargs)

File "/usr/lib/ambari-agent/lib/resource_management/core/shell.py", line 161, in checked_call

returns=returns,

File "/usr/lib/ambari-agent/lib/resource_management/core/shell.py", line 278, in _call_wrapper

result = _call(command, **kwargs_copy)

File "/usr/lib/ambari-agent/lib/resource_management/core/shell.py", line 493, in _call

raise ExecutionFailed(err_msg, code, out, err)

resource_management.core.exceptions.ExecutionFailed: Execution of '/usr/bigtop/current/hue/build/env/bin/hue syncdb --noinput' returned 1. Traceback (most recent call last):

File "/usr/bigtop/current/hue/build/env/lib/python3.8/site-packages/django/db/backends/mysql/base.py", line 15, in <module>

import MySQLdb as Database

File "/usr/bigtop/current/hue/build/env/lib/python3.8/site-packages/MySQLdb/__init__.py", line 17, in <module>

from . import _mysql

ImportError: libmariadb.so.3: cannot open shared object file: No such file or directory

The above exception was the direct cause of the following exception:

Traceback (most recent call last):

File "/usr/bigtop/current/hue/build/env/bin/hue", line 33, in <module>

sys.exit(load_entry_point('desktop', 'console_scripts', 'hue')())

File "/usr/bigtop/current/hue/desktop/core/src/desktop/manage_entry.py", line 239, in entry

raise e

File "/usr/bigtop/current/hue/desktop/core/src/desktop/manage_entry.py", line 233, in entry

execute_from_command_line(sys.argv)

File "/usr/bigtop/current/hue/build/env/lib/python3.8/site-packages/django/core/management/__init__.py", line 419, in execute_from_command_line

utility.execute()

File "/usr/bigtop/current/hue/build/env/lib/python3.8/site-packages/django/core/management/__init__.py", line 395, in execute

django.setup()

File "/usr/bigtop/current/hue/build/env/lib/python3.8/site-packages/django/__init__.py", line 24, in setup

apps.populate(settings.INSTALLED_APPS)

File "/usr/bigtop/current/hue/build/env/lib/python3.8/site-packages/django/apps/registry.py", line 114, in populate

app_config.import_models()

File "/usr/bigtop/current/hue/build/env/lib/python3.8/site-packages/django/apps/config.py", line 301, in import_models

self.models_module = import_module(models_module_name)

File "/usr/bigtop/current/hue/build/env/lib/python3.8/importlib/__init__.py", line 127, in import_module

return _bootstrap._gcd_import(name[level:], package, level)

File "<frozen importlib._bootstrap>", line 1014, in _gcd_import

File "<frozen importlib._bootstrap>", line 991, in _find_and_load

File "<frozen importlib._bootstrap>", line 975, in _find_and_load_unlocked

File "<frozen importlib._bootstrap>", line 671, in _load_unlocked

File "<frozen importlib._bootstrap_external>", line 843, in exec_module

File "<frozen importlib._bootstrap>", line 219, in _call_with_frames_removed

File "/usr/bigtop/current/hue/build/env/lib/python3.8/site-packages/django/contrib/auth/models.py", line 3, in <module>

from django.contrib.auth.base_user import AbstractBaseUser, BaseUserManager

File "/usr/bigtop/current/hue/build/env/lib/python3.8/site-packages/django/contrib/auth/base_user.py", line 48, in <module>

class AbstractBaseUser(models.Model):

File "/usr/bigtop/current/hue/build/env/lib/python3.8/site-packages/django/db/models/base.py", line 122, in __new__

new_class.add_to_class('_meta', Options(meta, app_label))

File "/usr/bigtop/current/hue/build/env/lib/python3.8/site-packages/django/db/models/base.py", line 326, in add_to_class

value.contribute_to_class(cls, name)

File "/usr/bigtop/current/hue/build/env/lib/python3.8/site-packages/django/db/models/options.py", line 207, in contribute_to_class

self.db_table = truncate_name(self.db_table, connection.ops.max_name_length())

File "/usr/bigtop/current/hue/build/env/lib/python3.8/site-packages/django/utils/connection.py", line 15, in __getattr__

return getattr(self._connections[self._alias], item)

File "/usr/bigtop/current/hue/build/env/lib/python3.8/site-packages/django/utils/connection.py", line 62, in __getitem__

conn = self.create_connection(alias)

File "/usr/bigtop/current/hue/build/env/lib/python3.8/site-packages/django/db/utils.py", line 204, in create_connection

backend = load_backend(db['ENGINE'])

File "/usr/bigtop/current/hue/build/env/lib/python3.8/site-packages/django/db/utils.py", line 111, in load_backend

return import_module('%s.base' % backend_name)

File "/usr/bigtop/current/hue/build/env/lib/python3.8/importlib/__init__.py", line 127, in import_module

return _bootstrap._gcd_import(name[level:], package, level)

File "/usr/bigtop/current/hue/build/env/lib/python3.8/site-packages/django/db/backends/mysql/base.py", line 17, in <module>

raise ImproperlyConfigured(

django.core.exceptions.ImproperlyConfigured: Error loading MySQLdb module.

Did you install mysqlclient?

stdout:

2025-12-30 10:32:51,984 - Stack Feature Version Info: Cluster Stack=3.2.0, Command Stack=None, Command Version=None -> 3.2.0

2025-12-30 10:32:51,985 - Using hadoop conf dir: /etc/hadoop/conf

2025-12-30 10:32:51,985 - Skipping param: datanode_max_locked_memory, due to Configuration parameter 'dfs.datanode.max.locked.memory' was not found in configurations dictionary!

2025-12-30 10:32:51,986 - Skipping param: falcon_user, due to Configuration parameter 'falcon-env' was not found in configurations dictionary!

2025-12-30 10:32:51,986 - Skipping param: gmetad_user, due to Configuration parameter 'ganglia-env' was not found in configurations dictionary!

2025-12-30 10:32:51,986 - Skipping param: gmond_user, due to Configuration parameter 'ganglia-env' was not found in configurations dictionary!

2025-12-30 10:32:51,986 - Skipping param: nfsgateway_heapsize, due to Configuration parameter 'nfsgateway_heapsize' was not found in configurations dictionary!

2025-12-30 10:32:51,986 - Skipping param: oozie_user, due to Configuration parameter 'oozie-env' was not found in configurations dictionary!

2025-12-30 10:32:51,986 - Skipping param: repo_info, due to Configuration parameter 'repoInfo' was not found in configurations dictionary!

2025-12-30 10:32:51,986 - Group['flink'] {}

2025-12-30 10:32:51,986 - Group['spark'] {}

2025-12-30 10:32:51,987 - Group['ranger'] {}

2025-12-30 10:32:51,987 - Group['hdfs'] {}

2025-12-30 10:32:51,987 - Group['hue'] {}

2025-12-30 10:32:51,987 - Group['zeppelin'] {}

2025-12-30 10:32:51,987 - Group['hadoop'] {}

2025-12-30 10:32:51,987 - User['hive'] {'uid': None, 'gid': 'hadoop', 'groups': ['hadoop'], 'fetch_nonlocal_groups': True}

2025-12-30 10:32:51,989 - User['zookeeper'] {'uid': None, 'gid': 'hadoop', 'groups': ['hadoop'], 'fetch_nonlocal_groups': True}

2025-12-30 10:32:51,990 - User['atlas'] {'uid': None, 'gid': 'hadoop', 'groups': ['hadoop'], 'fetch_nonlocal_groups': True}

2025-12-30 10:32:51,991 - User['ams'] {'uid': None, 'gid': 'hadoop', 'groups': ['hadoop'], 'fetch_nonlocal_groups': True}

2025-12-30 10:32:51,991 - User['ranger'] {'uid': None, 'gid': 'hadoop', 'groups': ['ranger', 'hadoop'], 'fetch_nonlocal_groups': True}

2025-12-30 10:32:51,992 - User['tez'] {'uid': None, 'gid': 'hadoop', 'groups': ['hadoop'], 'fetch_nonlocal_groups': True}

2025-12-30 10:32:51,993 - User['zeppelin'] {'uid': None, 'gid': 'hadoop', 'groups': ['zeppelin', 'hadoop'], 'fetch_nonlocal_groups': True}

2025-12-30 10:32:51,993 - User['impala'] {'uid': None, 'gid': 'hadoop', 'groups': ['hadoop'], 'fetch_nonlocal_groups': True}

2025-12-30 10:32:51,994 - User['flink'] {'uid': None, 'gid': 'hadoop', 'groups': ['flink', 'hadoop'], 'fetch_nonlocal_groups': True}

2025-12-30 10:32:51,995 - User['paimon'] {'uid': None, 'gid': 'hadoop', 'groups': ['hadoop'], 'fetch_nonlocal_groups': True}

2025-12-30 10:32:51,995 - User['spark'] {'uid': None, 'gid': 'hadoop', 'groups': ['spark', 'hadoop'], 'fetch_nonlocal_groups': True}

2025-12-30 10:32:51,996 - User['ambari-qa'] {'uid': None, 'gid': 'hadoop', 'groups': ['hadoop'], 'fetch_nonlocal_groups': True}

2025-12-30 10:32:51,997 - User['solr'] {'uid': None, 'gid': 'hadoop', 'groups': ['hadoop'], 'fetch_nonlocal_groups': True}

2025-12-30 10:32:51,997 - User['kafka'] {'uid': None, 'gid': 'hadoop', 'groups': ['hadoop'], 'fetch_nonlocal_groups': True}

2025-12-30 10:32:51,998 - User['hdfs'] {'uid': None, 'gid': 'hadoop', 'groups': ['hdfs', 'hadoop'], 'fetch_nonlocal_groups': True}

2025-12-30 10:32:51,998 - User['hue'] {'uid': None, 'gid': 'hadoop', 'groups': ['hue', 'hadoop'], 'fetch_nonlocal_groups': True}

2025-12-30 10:32:51,999 - User['sqoop'] {'uid': None, 'gid': 'hadoop', 'groups': ['hadoop'], 'fetch_nonlocal_groups': True}

2025-12-30 10:32:52,000 - User['yarn'] {'uid': None, 'gid': 'hadoop', 'groups': ['hadoop'], 'fetch_nonlocal_groups': True}

2025-12-30 10:32:52,000 - User['mapred'] {'uid': None, 'gid': 'hadoop', 'groups': ['hadoop'], 'fetch_nonlocal_groups': True}

2025-12-30 10:32:52,001 - User['hbase'] {'uid': None, 'gid': 'hadoop', 'groups': ['hadoop'], 'fetch_nonlocal_groups': True}

2025-12-30 10:32:52,002 - User['hcat'] {'uid': None, 'gid': 'hadoop', 'groups': ['hadoop'], 'fetch_nonlocal_groups': True}

2025-12-30 10:32:52,002 - File['/var/lib/ambari-agent/tmp/changeUid.sh'] {'content': StaticFile('changeToSecureUid.sh'), 'mode': 0o555}

2025-12-30 10:32:52,003 - Execute['/var/lib/ambari-agent/tmp/changeUid.sh ambari-qa /tmp/hadoop-ambari-qa,/tmp/hsperfdata_ambari-qa,/home/ambari-qa,/tmp/ambari-qa,/tmp/sqoop-ambari-qa 0'] {'not_if': '(test $(id -u ambari-qa) -gt 1000) || (false)'}

2025-12-30 10:32:52,007 - Skipping Execute['/var/lib/ambari-agent/tmp/changeUid.sh ambari-qa /tmp/hadoop-ambari-qa,/tmp/hsperfdata_ambari-qa,/home/ambari-qa,/tmp/ambari-qa,/tmp/sqoop-ambari-qa 0'] due to not_if

2025-12-30 10:32:52,008 - Directory['/tmp/hbase-hbase'] {'owner': 'hbase', 'mode': 0o775, 'create_parents': True, 'cd_access': 'a'}

2025-12-30 10:32:52,008 - File['/var/lib/ambari-agent/tmp/changeUid.sh'] {'content': StaticFile('changeToSecureUid.sh'), 'mode': 0o555}

2025-12-30 10:32:52,009 - File['/var/lib/ambari-agent/tmp/changeUid.sh'] {'content': StaticFile('changeToSecureUid.sh'), 'mode': 0o555}

2025-12-30 10:32:52,009 - call['/var/lib/ambari-agent/tmp/changeUid.sh hbase'] {}

2025-12-30 10:32:52,014 - call returned (0, '1025')

2025-12-30 10:32:52,015 - Execute['/var/lib/ambari-agent/tmp/changeUid.sh hbase /home/hbase,/tmp/hbase,/usr/bin/hbase,/var/log/hbase,/tmp/hbase-hbase 1025'] {'not_if': '(test $(id -u hbase) -gt 1000) || (false)'}

2025-12-30 10:32:52,018 - Skipping Execute['/var/lib/ambari-agent/tmp/changeUid.sh hbase /home/hbase,/tmp/hbase,/usr/bin/hbase,/var/log/hbase,/tmp/hbase-hbase 1025'] due to not_if

2025-12-30 10:32:52,018 - User['hdfs'] {'fetch_nonlocal_groups': True}

2025-12-30 10:32:52,019 - User['hdfs'] {'groups': ['hdfs', 'hadoop'], 'fetch_nonlocal_groups': True}

2025-12-30 10:32:52,019 - FS Type: HDFS

2025-12-30 10:32:52,020 - Directory['/etc/hadoop'] {'mode': 0o755}

2025-12-30 10:32:52,029 - File['/etc/hadoop/conf/hadoop-env.sh'] {'owner': 'root', 'group': 'hadoop', 'content': InlineTemplate(...)}

2025-12-30 10:32:52,029 - Writing File['/etc/hadoop/conf/hadoop-env.sh'] because contents don't match

2025-12-30 10:32:52,029 - Moving /tmp/tmp1767061972.029608_199 to /etc/hadoop/conf/hadoop-env.sh

2025-12-30 10:32:52,032 - Directory['/var/lib/ambari-agent/tmp/hadoop_java_io_tmpdir'] {'owner': 'hdfs', 'group': 'hadoop', 'mode': 0o1777}

2025-12-30 10:32:52,053 - Skipping param: gmetad_user, due to Configuration parameter 'ganglia-env' was not found in configurations dictionary!

2025-12-30 10:32:52,054 - Skipping param: gmond_user, due to Configuration parameter 'ganglia-env' was not found in configurations dictionary!

2025-12-30 10:32:52,054 - Skipping param: repo_info, due to Configuration parameter 'repoInfo' was not found in configurations dictionary!

2025-12-30 10:32:52,054 - Repository['BIGTOP-3.2.0-repo-2'] {'action': ['prepare'], 'base_url': 'http://10.6.37.126:8000', 'mirror_list': '', 'repo_file_name': 'ambari-bigtop-2', 'repo_template': '[{{repo_id}}]\nname={{repo_id}}\n{% if mirror_list %}\nmirrorlist={{mirror_list}}{% else %}\nbaseurl={{base_url}}\n{% endif %}\npath=/\nenabled=1\ngpgcheck=0', 'components': ['bigtop', 'main']}

2025-12-30 10:32:52,058 - Repository[None] {'action': ['create']}

2025-12-30 10:32:52,059 - File['/tmp/tmpbnjiibb2'] {'content': b'[BIGTOP-3.2.0-repo-2]\nname=BIGTOP-3.2.0-repo-2\n\nbaseurl=http://10.6.37.126:8000\n\npath=/\nenabled=1\ngpgcheck=0', 'owner': 'root'}

2025-12-30 10:32:52,059 - Writing File['/tmp/tmpbnjiibb2'] because contents don't match

2025-12-30 10:32:52,059 - Changing group for /tmp/tmp1767061972.0597453_294 from 1013 to root

2025-12-30 10:32:52,059 - Moving /tmp/tmp1767061972.0597453_294 to /tmp/tmpbnjiibb2

2025-12-30 10:32:52,063 - File['/tmp/tmptt1q9275'] {'content': StaticFile('/etc/yum.repos.d/ambari-bigtop-2.repo'), 'owner': 'root'}

2025-12-30 10:32:52,063 - Writing File['/tmp/tmptt1q9275'] because contents don't match

2025-12-30 10:32:52,063 - Changing group for /tmp/tmp1767061972.0633075_689 from 1013 to root

2025-12-30 10:32:52,063 - Moving /tmp/tmp1767061972.0633075_689 to /tmp/tmptt1q9275

2025-12-30 10:32:52,067 - Package['unzip'] {'retry_on_repo_unavailability': False, 'retry_count': 5}

2025-12-30 10:32:52,634 - Skipping installation of existing package unzip

2025-12-30 10:32:52,634 - Package['curl'] {'retry_on_repo_unavailability': False, 'retry_count': 5}

2025-12-30 10:32:52,890 - Skipping installation of existing package curl

2025-12-30 10:32:52,890 - Package['bigtop-select'] {'retry_on_repo_unavailability': False, 'retry_count': 5}

2025-12-30 10:32:52,971 - Skipping installation of existing package bigtop-select

2025-12-30 10:32:52,973 - The repository with version 3.2.0 for this command has been marked as resolved. It will be used to report the version of the component which was installed

2025-12-30 10:32:52,976 - Skipping stack-select on HUE because it does not exist in the stack-select package structure.

2025-12-30 10:32:53,212 - config['clusterHostInfo']: {'paimon_hosts': ['test-p05', 'test-p04', 'test-p03', 'test-p01', 'test-p02'], 'kerberos_client_hosts': ['test-p05', 'test-p04', 'test-p03', 'test-p01', 'test-p02'], 'atlas_client_hosts': ['test-p05', 'test-p04', 'test-p03', 'test-p01', 'test-p02'], 'solr_server_hosts': ['test-p05', 'test-p04', 'test-p02'], 'namenode_hosts': ['test-p01', 'test-p02'], 'spark_jobhistoryserver_hosts': ['test-p01'], 'flink_client_hosts': ['test-p05', 'test-p04', 'test-p03', 'test-p01', 'test-p02'], 'spark_client_hosts': ['test-p05', 'test-p04', 'test-p03', 'test-p01', 'test-p02'], 'hbase_master_hosts': ['test-p05', 'test-p01', 'test-p02'], 'metrics_monitor_hosts': ['test-p05', 'test-p04', 'test-p03', 'test-p01', 'test-p02'], 'nodemanager_hosts': ['test-p05', 'test-p04', 'test-p03', 'test-p02'], 'ranger_usersync_hosts': ['test-p02'], 'atlas_server_hosts': ['test-p02'], 'zookeeper_server_hosts': ['test-p03', 'test-p01', 'test-p02'], 'yarn_client_hosts': ['test-p05', 'test-p04', 'test-p03', 'test-p01', 'test-p02'], 'journalnode_hosts': ['test-p05', 'test-p04', 'test-p02'], 'zookeeper_client_hosts': ['test-p05', 'test-p04', 'test-p03', 'test-p01', 'test-p02'], 'hbase_regionserver_hosts': ['test-p05', 'test-p04', 'test-p03', 'test-p02'], 'impala_daemon_hosts': ['test-p05', 'test-p04', 'test-p03', 'test-p02'], 'resourcemanager_hosts': ['test-p01', 'test-p02'], 'hive_metastore_hosts': ['test-p01', 'test-p02'], 'zkfc_hosts': ['test-p01', 'test-p02'], 'mapreduce2_client_hosts': ['test-p05', 'test-p04', 'test-p03', 'test-p01', 'test-p02'], 'tez_client_hosts': ['test-p05', 'test-p04', 'test-p03', 'test-p01', 'test-p02'], 'ranger_admin_hosts': ['test-p05', 'test-p04', 'test-p02'], 'hive_client_hosts': ['test-p05', 'test-p04', 'test-p03', 'test-p01', 'test-p02'], 'hdfs_client_hosts': ['test-p05', 'test-p04', 'test-p03', 'test-p01', 'test-p02'], 'kafka_broker_hosts': ['test-p05', 'test-p04', 'test-p02'], 'zeppelin_server_hosts': ['test-p01'], 
'impala_catalog_hosts': ['test-p01'], 'flink_historyserver_hosts': ['test-p01'], 'impala_state_store_hosts': ['test-p01'], 'hive_server_hosts': ['test-p01', 'test-p02'], 'ranger_tagsync_hosts': ['test-p05'], 'sqoop_hosts': ['test-p05', 'test-p04', 'test-p03', 'test-p01', 'test-p02'], 'spark_thriftserver_hosts': ['test-p05', 'test-p04', 'test-p03', 'test-p01', 'test-p02'], 'hcat_hosts': ['test-p05', 'test-p04', 'test-p03', 'test-p01', 'test-p02'], 'metrics_collector_hosts': ['test-p03'], 'metrics_grafana_hosts': ['test-p01'], 'webhcat_server_hosts': ['test-p01'], 'hbase_client_hosts': ['test-p05', 'test-p04', 'test-p03', 'test-p01', 'test-p02'], 'hbase_thriftserver_hosts': ['test-p05', 'test-p04', 'test-p03', 'test-p01', 'test-p02'], 'datanode_hosts': ['test-p05', 'test-p04', 'test-p03', 'test-p02'], 'historyserver_hosts': ['test-p02'], 'hue_server_hosts': ['test-p01', 'test-p02'], 'all_hosts': ['test-p05', 'test-p04', 'test-p03', 'test-p01', 'test-p02'], 'all_racks': ['/default-rack', '/default-rack', '/default-rack', '/default-rack', '/default-rack'], 'all_ipv4_ips': ['10.6.37.118', '10.6.37.117', '10.6.37.116', '10.6.37.126', '10.6.37.115']}

2025-12-30 10:32:53,215 - Using hadoop conf dir: /etc/hadoop/conf

2025-12-30 10:32:53,221 - Command repositories: BIGTOP-3.2.0-repo-2

2025-12-30 10:32:53,221 - Applicable repositories: BIGTOP-3.2.0-repo-2

2025-12-30 10:32:53,221 - Looking for matching packages in the following repositories: BIGTOP-3.2.0-repo-2

2025-12-30 10:32:54,356 - Adding fallback repositories: BIGTOP-3.2.0-repo-1

2025-12-30 10:32:55,489 - Package['hue_3_2_0'] {'retry_on_repo_unavailability': False, 'retry_count': 5}

2025-12-30 10:32:55,956 - Skipping installation of existing package hue_3_2_0

2025-12-30 10:32:55,957 - Execute['python /usr/lib/bigtop-select/distro-select set hue 3.2.0'] {}

2025-12-30 10:32:55,985 - Directory['/var/log/hue'] {'owner': 'hue', 'create_parents': True, 'group': 'hue', 'mode': 0o775}

2025-12-30 10:32:55,985 - Directory['/var/run/hue'] {'owner': 'hue', 'create_parents': True, 'group': 'hadoop', 'mode': 0o775}

2025-12-30 10:32:55,998 - File['/usr/bigtop/current/hue/desktop/conf/hue.ini'] {'owner': 'hue', 'group': 'hue', 'mode': 0o755, 'content': InlineTemplate(...)}

2025-12-30 10:32:55,998 - Writing File['/usr/bigtop/current/hue/desktop/conf/hue.ini'] because contents don't match

2025-12-30 10:32:55,998 - Changing owner for /tmp/tmp1767061975.9987986_781 from 0 to hue

2025-12-30 10:32:55,998 - Changing group for /tmp/tmp1767061975.9987986_781 from 1013 to hue

2025-12-30 10:32:55,999 - Changing permission for /tmp/tmp1767061975.9987986_781 from 644 to 755

2025-12-30 10:32:55,999 - Moving /tmp/tmp1767061975.9987986_781 to /usr/bigtop/current/hue/desktop/conf/hue.ini

2025-12-30 10:32:56,003 - File['/usr/bigtop/current/hue/desktop/conf/log.conf'] {'owner': 'hue', 'group': 'hue', 'mode': 0o755, 'content': InlineTemplate(...)}

2025-12-30 10:32:56,004 - Writing File['/usr/bigtop/current/hue/desktop/conf/log.conf'] because contents don't match

2025-12-30 10:32:56,004 - Changing owner for /tmp/tmp1767061976.0041268_925 from 0 to hue

2025-12-30 10:32:56,004 - Changing group for /tmp/tmp1767061976.0041268_925 from 1013 to hue

2025-12-30 10:32:56,004 - Changing permission for /tmp/tmp1767061976.0041268_925 from 644 to 755

2025-12-30 10:32:56,004 - Moving /tmp/tmp1767061976.0041268_925 to /usr/bigtop/current/hue/desktop/conf/log.conf

2025-12-30 10:32:56,008 - File['/usr/bigtop/current/hue/desktop/conf/log4j.properties'] {'owner': 'hue', 'group': 'hue', 'mode': 0o755, 'content': InlineTemplate(...)}

2025-12-30 10:32:56,009 - Writing File['/usr/bigtop/current/hue/desktop/conf/log4j.properties'] because contents don't match

2025-12-30 10:32:56,009 - Changing owner for /tmp/tmp1767061976.0092728_950 from 0 to hue

2025-12-30 10:32:56,009 - Changing group for /tmp/tmp1767061976.0092728_950 from 1013 to hue

2025-12-30 10:32:56,009 - Changing permission for /tmp/tmp1767061976.0092728_950 from 644 to 755

2025-12-30 10:32:56,009 - Moving /tmp/tmp1767061976.0092728_950 to /usr/bigtop/current/hue/desktop/conf/log4j.properties

2025-12-30 10:32:56,013 - Execute['/usr/bigtop/current/hue/build/env/bin/hue syncdb --noinput'] {'environment': {'JAVA_HOME': '/usr/jdk64/jdk17', 'HADOOP_CONF_DIR': '/etc/hadoop/conf', 'LD_LIBRARY_PATH': '$LD_LIBRARY_PATH:/usr/hdp/current/hue/lib-native'}, 'user': 'hue'}

2025-12-30 10:32:56,527 - The repository with version 3.2.0 for this command has been marked as resolved. It will be used to report the version of the component which was installed

2025-12-30 10:32:56,530 - Skipping stack-select on HUE because it does not exist in the stack-select package structure.

Command failed after 1 tries


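Note

Django's hint is misleading. mysqlclient is actually installed (the traceback runs through MySQLdb/__init__.py in the venv's site-packages). What fails is its C extension, _mysql, which cannot be loaded because a shared library it depends on is missing.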
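The misleading message comes from exception chaining. Judging by the traceback (lines 15 to 17 of django/db/backends/mysql/base.py), Django catches the ImportError from `import MySQLdb` and re-raises ImproperlyConfigured with the original error attached as its cause. That chaining is what prints "The above exception was the direct cause of the following exception". The sketch below imitates the pattern without Django and walks `__cause__` back to the real error. `ImproperlyConfiguredStandIn`, `load_backend_stub`, and `root_cause` are hypothetical names for illustration, not Django APIs.

```python
class ImproperlyConfiguredStandIn(Exception):
    """Stand-in for django.core.exceptions.ImproperlyConfigured (no Django needed)."""


def load_backend_stub():
    # Simulate the loader failure that mysqlclient's C extension reports on import.
    try:
        raise ImportError(
            "libmariadb.so.3: cannot open shared object file: No such file or directory"
        )
    except ImportError as err:
        # Re-raise with a friendlier message, keeping the original as __cause__.
        raise ImproperlyConfiguredStandIn(
            "Error loading MySQLdb module.\nDid you install mysqlclient?"
        ) from err


def root_cause(exc: BaseException) -> BaseException:
    """Walk the chain (preferring __cause__ over __context__) to the earliest exception."""
    seen = set()
    while id(exc) not in seen:
        seen.add(id(exc))
        nxt = exc.__cause__ or exc.__context__
        if nxt is None:
            break
        exc = nxt
    return exc


try:
    load_backend_stub()
except ImproperlyConfiguredStandIn as top:
    cause = root_cause(top)
    print(type(cause).__name__, "->", cause)
```

Applied to a real failure, the same walk lands on the ImportError that names libmariadb.so.3.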

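# III. Pinpointing the error: mysqlclient is not a "pure Python" package

# (1) What did Ambari actually run?

The failing command is clearly visible in the stack trace:

/usr/bigtop/current/hue/build/env/bin/hue syncdb --noinput

This tells us that:

- Hue runs in its own Python venv

- Django is initializing its database connection

- The database driver in use is mysqlclient (imported as MySQLdb)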
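Because Hue runs in its own venv, testing `import MySQLdb` with the system Python says nothing about Hue. The test has to use the venv's interpreter, and ideally the hue user, since Ambari executes syncdb as hue (see the Execute line in the stdout log). A minimal sketch that runs the import in a separate interpreter. The path `/usr/bigtop/current/hue/build/env/bin/python` is an assumption inferred from the `bin/hue` and `lib/python3.8` paths in the traceback, so confirm it exists on your node. `check_import` is a hypothetical helper name.

```python
import subprocess
import sys
from typing import Tuple

# ASSUMED path of the Hue venv interpreter, inferred from the traceback paths
# (.../hue/build/env/bin/hue and .../env/lib/python3.8/...). Verify it exists first.
HUE_VENV_PYTHON = "/usr/bigtop/current/hue/build/env/bin/python"


def check_import(python_exe: str, module: str) -> Tuple[bool, str]:
    """Run `import <module>` in a separate interpreter; return (ok, last stderr line)."""
    try:
        proc = subprocess.run(
            [python_exe, "-c", "import " + module],
            capture_output=True,
            text=True,
            timeout=60,
        )
    except FileNotFoundError:
        return False, "interpreter not found: " + python_exe
    lines = proc.stderr.strip().splitlines()
    return proc.returncode == 0, (lines[-1] if lines else "")


if __name__ == "__main__":
    ok, msg = check_import(HUE_VENV_PYTHON, "MySQLdb")
    if ok:
        print("MySQLdb import OK")
    else:
        print("MySQLdb import failed: " + msg, file=sys.stderr)
```

On an affected node, the reported line would be expected to be the same ImportError naming libmariadb.so.3, which reproduces the failure without going through Ambari.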

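# (2) mysqlclient's actual dependency chain

mysqlclient ships a C extension module (_mysql). When it is imported, the dynamic loader must resolve the system shared library the extension was linked against: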

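| Component | Description |
|---|---|
| mysqlclient | Python DB API interface (imported as MySQLdb) |
| libmariadb.so.3 | Shared library provided by MariaDB Connector/C |
| Providing package | mariadb-connector-c |

If libmariadb.so.3 is missing from the system, the import fails immediately with ImportError.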
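A common way to confirm which library the extension is missing is to run `ldd` on mysqlclient's compiled `_mysql` module and look for "not found" entries. The following is a minimal sketch, assuming `ldd` is installed and that the extension file matches `_mysql*.so` inside the MySQLdb package directory (consistent with the `from . import _mysql` line in the traceback). Run it with the Hue venv's interpreter so it finds the venv's copy of MySQLdb. `find_mysql_extension` and `missing_libs` are hypothetical helper names.

```python
import glob
import importlib.util
import os
import subprocess
from typing import List, Optional


def find_mysql_extension() -> Optional[str]:
    """Locate MySQLdb's compiled _mysql module without importing MySQLdb itself."""
    # A top-level find_spec only searches sys.path; it does not execute the package.
    spec = importlib.util.find_spec("MySQLdb")
    if spec is None or not spec.submodule_search_locations:
        return None
    for pkg_dir in spec.submodule_search_locations:
        hits = glob.glob(os.path.join(pkg_dir, "_mysql*.so"))
        if hits:
            return hits[0]
    return None


def missing_libs(ldd_output: str) -> List[str]:
    """Return the sonames that ldd reports as 'not found'."""
    missing = []
    for line in ldd_output.splitlines():
        # Unresolved entries look like: "\tlibmariadb.so.3 => not found"
        if "=> not found" in line:
            missing.append(line.split("=>")[0].strip())
    return missing


if __name__ == "__main__":
    ext = find_mysql_extension()
    if ext is None:
        print("MySQLdb/_mysql extension not found on this interpreter's sys.path")
    else:
        out = subprocess.run(["ldd", ext], capture_output=True, text=True).stdout
        print(ext, "missing:", missing_libs(out) or "nothing")
```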

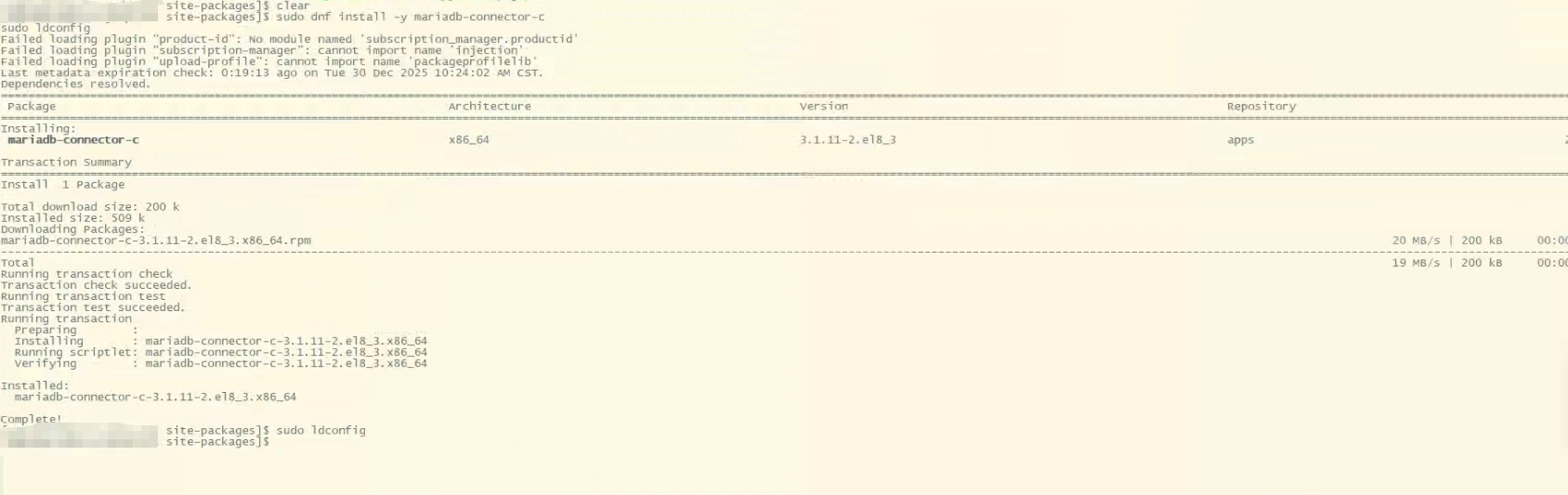

# IV. Fix

The fix is straightforward:

sudo dnf install -y mariadb-connector-c

sudo ldconfig


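Run ldconfig

Installing the RPM only places the .so file in a system library directory. ldconfig refreshes the dynamic linker cache; if it is skipped, the Hue venv may still fail to resolve the newly installed library.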
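Whether the linker cache already knows a library can be checked with `ldconfig -p`, which prints the cache contents. A minimal sketch that parses that listing. The parser assumes glibc's usual `soname (flags) => path` line format. `cached_paths` and `read_ld_cache` are hypothetical helper names.

```python
import subprocess
from typing import List


def read_ld_cache() -> str:
    """Return `ldconfig -p` output, trying /sbin/ldconfig if ldconfig is not on PATH."""
    for exe in ("ldconfig", "/sbin/ldconfig"):
        try:
            return subprocess.run([exe, "-p"], capture_output=True, text=True).stdout
        except FileNotFoundError:
            continue
    return ""


def cached_paths(soname: str, ldconfig_p_output: str) -> List[str]:
    """Return the paths that the ld.so cache listing maps to exactly `soname`."""
    paths = []
    for line in ldconfig_p_output.splitlines():
        # Entries look like, e.g.: "\tlibmariadb.so.3 (libc6,x86-64) => /usr/lib64/libmariadb.so.3"
        left, sep, right = line.partition("=>")
        fields = left.split()
        if sep and fields and fields[0] == soname:
            paths.append(right.strip())
    return paths


if __name__ == "__main__":
    found = cached_paths("libmariadb.so.3", read_ld_cache())
    print("libmariadb.so.3 ->", found or "not in cache")
```

An empty result after installing mariadb-connector-c is a hint that ldconfig has not been run yet.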

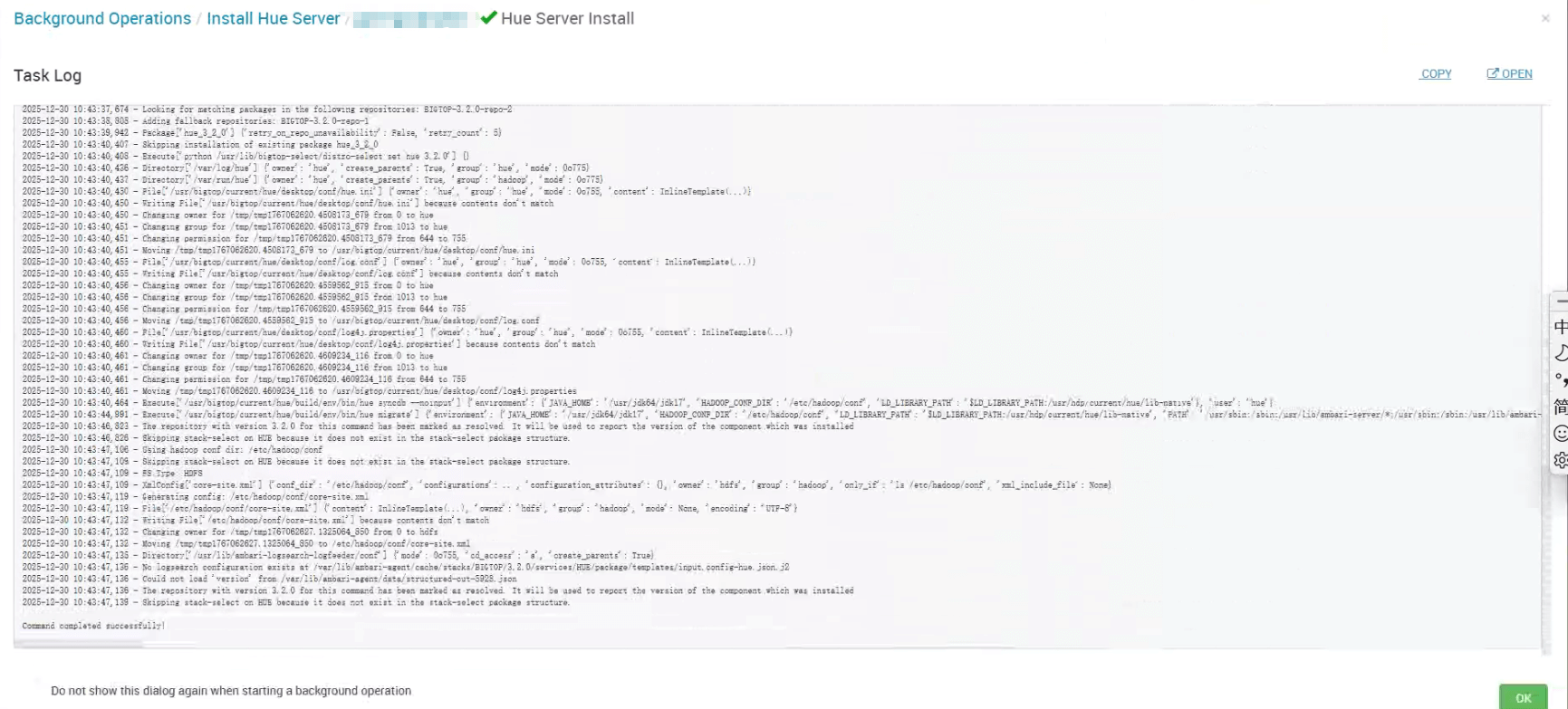

Key point of the figure

Once the shared library dependency can be resolved, hue syncdb runs to completion and Hue Server can then start normally.

# V. Solution (applies across EL8-series distributions)

Standard procedure

On each node that hosts Hue Server, run:

sudo dnf install -y mariadb-connector-c

sudo ldconfig
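After running these commands, one way to confirm that the loader can now resolve the library, independent of Hue, is to ask it to load the soname directly through Python's ctypes (which calls dlopen). The following is a minimal sketch; `can_load` is a hypothetical helper name. A successful load only shows that the library resolves in the current environment. It does not by itself prove that syncdb will succeed.

```python
import ctypes


def can_load(soname: str) -> bool:
    """Return True if the dynamic loader can dlopen `soname` via its normal search path."""
    try:
        ctypes.CDLL(soname)
    except OSError:
        return False
    return True


if __name__ == "__main__":
    print("libmariadb.so.3 loadable:", can_load("libmariadb.so.3"))
```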
