[Kerberos Enabled]-Atlas Startup-HBase Permission Error

# 1. Background

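Fixed in ttr-2.2.1 and later

In earlier versions, Atlas may fail to start with insufficient HBase permissions once Kerberos is enabled. Newer versions include the fix and need no extra steps. If you run into a similar problem during deployment, custom development, or testing, you can contact 小饕.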

After Kerberos is enabled, Atlas fails to start. The most telling error in the log is org.apache.hadoop.hbase.security.AccessDeniedException.

# 2. Error Log Analysis

When Atlas starts, the console prints the following stack trace:

```text
at org.eclipse.jetty.webapp.WebAppContext.doStart(WebAppContext.java:524)
at org.eclipse.jetty.util.component.AbstractLifeCycle.start(AbstractLifeCycle.java:73)
at org.eclipse.jetty.util.component.ContainerLifeCycle.start(ContainerLifeCycle.java:169)
at org.eclipse.jetty.server.Server.start(Server.java:423)
at org.eclipse.jetty.util.component.ContainerLifeCycle.doStart(ContainerLifeCycle.java:110)
at org.eclipse.jetty.server.handler.AbstractHandler.doStart(AbstractHandler.java:97)
at org.eclipse.jetty.server.Server.doStart(Server.java:387)
at org.eclipse.jetty.util.component.AbstractLifeCycle.start(AbstractLifeCycle.java:73)
at org.apache.atlas.web.service.EmbeddedServer.start(EmbeddedServer.java:111)
at org.apache.atlas.Atlas.main(Atlas.java:133)
Caused by: org.janusgraph.diskstorage.TemporaryBackendException: Temporary failure in storage backend
at org.janusgraph.diskstorage.hbase.HBaseStoreManager.ensureTableExists(HBaseStoreManager.java:777)
at org.janusgraph.diskstorage.hbase.HBaseStoreManager.getLocalKeyPartition(HBaseStoreManager.java:559)
at org.janusgraph.diskstorage.hbase.HBaseStoreManager.getDeployment(HBaseStoreManager.java:345)
... 98 common frames omitted
Caused by: org.apache.hadoop.hbase.security.AccessDeniedException: org.apache.hadoop.hbase.security.AccessDeniedException: Insufficient permissions (user=atlas/dev2@TTBIGDATA.COM, scope=default:atlas_janus, params=[table=default:atlas_janus,],action=CREATE)
at org.apache.hadoop.hbase.security.access.AccessChecker.requirePermission(AccessChecker.java:273)
at org.apache.hadoop.hbase.security.access.AccessController.requirePermission(AccessController.java:350)
at org.apache.hadoop.hbase.security.access.AccessController.preGetTableDescriptors(AccessController.java:2112)
at org.apache.hadoop.hbase.master.MasterCoprocessorHost$86.call(MasterCoprocessorHost.java:1122)
at org.apache.hadoop.hbase.master.MasterCoprocessorHost$86.call(MasterCoprocessorHost.java:1119)
at org.apache.hadoop.hbase.coprocessor.CoprocessorHost$ObserverOperationWithoutResult.callObserver(CoprocessorHost.java:558)
at org.apache.hadoop.hbase.coprocessor.CoprocessorHost.execOperation(CoprocessorHost.java:631)
at org.apache.hadoop.hbase.master.MasterCoprocessorHost.preGetTableDescriptors(MasterCoprocessorHost.java:1119)
at org.apache.hadoop.hbase.master.HMaster.listTableDescriptors(HMaster.java:3223)
at org.apache.hadoop.hbase.master.MasterRpcServices.getTableDescriptors(MasterRpcServices.java:1088)
at org.apache.hadoop.hbase.shaded.protobuf.generated.MasterProtos$MasterService$2.callBlockingMethod(MasterProtos.java)
at org.apache.hadoop.hbase.ipc.RpcServer.call(RpcServer.java:384)
at org.apache.hadoop.hbase.ipc.CallRunner.run(CallRunner.java:131)
at org.apache.hadoop.hbase.ipc.RpcExecutor$Handler.run(RpcExecutor.java:371)
at org.apache.hadoop.hbase.ipc.RpcExecutor$Handler.run(RpcExecutor.java:351)
at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method)
at sun.reflect.NativeConstructorAccessorImpl.newInstance(NativeConstructorAccessorImpl.java:62)
at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45)
at java.lang.reflect.Constructor.newInstance(Constructor.java:423)
at org.apache.hadoop.hbase.ipc.RemoteWithExtrasException.instantiateException(RemoteWithExtrasException.java:110)
at org.apache.hadoop.hbase.ipc.RemoteWithExtrasException.unwrapRemoteException(RemoteWithExtrasException.java:100)
at org.apache.hadoop.hbase.shaded.protobuf.ProtobufUtil.makeIOExceptionOfException(ProtobufUtil.java:370)
at org.apache.hadoop.hbase.shaded.protobuf.ProtobufUtil.handleRemoteException(ProtobufUtil.java:358)
at org.apache.hadoop.hbase.client.MasterCallable.call(MasterCallable.java:101)
at org.apache.hadoop.hbase.client.RpcRetryingCallerImpl.callWithRetries(RpcRetryingCallerImpl.java:103)
at org.apache.hadoop.hbase.client.HBaseAdmin.executeCallable(HBaseAdmin.java:3019)
at org.apache.hadoop.hbase.client.HBaseAdmin.getTableDescriptor(HBaseAdmin.java:578)
at org.apache.hadoop.hbase.client.HBaseAdmin.getDescriptor(HBaseAdmin.java:362)
at org.janusgraph.diskstorage.hbase.HBaseStoreManager.ensureTableExists(HBaseStoreManager.java:755)
... 100 common frames omitted
Caused by: org.apache.hadoop.hbase.ipc.RemoteWithExtrasException: org.apache.hadoop.hbase.security.AccessDeniedException: Insufficient permissions (user=atlas/dev2@TTBIGDATA.COM, scope=default:atlas_janus, params=[table=default:atlas_janus,],action=CREATE)
```

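This means Atlas lacks CREATE permission when it initializes the HBase table default:atlas_janus.

The cause chain shows the sequence of events. During startup, JanusGraph's HBaseStoreManager.ensureTableExists requests the descriptor of default:atlas_janus through HBaseAdmin.getDescriptor. On the HBase Master, AccessController.preGetTableDescriptors requires CREATE permission on that table, and the check fails for atlas/dev2@TTBIGDATA.COM. JanusGraph wraps the error in a TemporaryBackendException, which aborts the startup of the Atlas web application.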

Key error:

```text
Insufficient permissions (user=atlas/dev2@TTBIGDATA.COM, scope=default:atlas_janus, action=CREATE)
```
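If the same failure shows up for another service or table, the fields to read are user, scope, and action in the Insufficient permissions message. The sketch below pulls them out of a single log line. It is POSIX sh and assumes the message format shown above. The helper names extract_field and summarize_denial are hypothetical and are not part of Atlas or HBase.

```shell
# Hypothetical helpers (not part of Atlas or HBase): extract fields from an
# HBase "Insufficient permissions (user=..., scope=..., action=...)" message.

# extract_field FIELD LINE -> prints the value of FIELD (user, scope, action)
extract_field() {
  # Capture everything after "FIELD=" up to the next ',' or ')'.
  printf '%s\n' "$2" | sed -n "s/.*$1=\([^,)]*\).*/\1/p" | head -n 1
}

# summarize_denial LINE -> one-line summary of who was denied what, and where
summarize_denial() {
  printf 'principal=%s scope=%s action=%s\n' \
    "$(extract_field user "$1")" \
    "$(extract_field scope "$1")" \
    "$(extract_field action "$1")"
}
```

For example, you could call `summarize_denial "$(grep -m 1 'Insufficient permissions' <atlas log file>)"`, where the log file location depends on your installation. Given the error line above, the output is `principal=atlas/dev2@TTBIGDATA.COM scope=default:atlas_janus action=CREATE`.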

# 3. Verify the Atlas Keytab Identity

First, check that the keytab for the atlas principal works:

```text
[root@dev2 atlas]# kinit -kt /etc/security/keytabs/atlas.service.keytab atlas/dev2@TTBIGDATA.COM
[root@dev2 atlas]# hbase shell -n <<<'whoami'
hbase:001:0> whoami
atlas/dev2@TTBIGDATA.COM (auth:KERBEROS)
groups: hadoop
Took 0.0459 seconds
```

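Verification result

whoami runs normally. This shows that the Kerberos ticket is valid and that HBase recognizes the atlas identity (auth:KERBEROS, group hadoop). Authentication is therefore not the problem.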
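whoami reports the full principal atlas/dev2@TTBIGDATA.COM, but the grant in the next step names only 'atlas'. This works because HBase maps Kerberos principals to short user names through Hadoop's hadoop.security.auth_to_local rules. Under the DEFAULT rule, a principal in the default realm maps to its first component.

The sketch below imitates only that DEFAULT rule, and the function name short_name is hypothetical. Custom RULE entries in core-site.xml can map names differently. On Hadoop 3, `hadoop kerbname atlas/dev2@TTBIGDATA.COM` reports the mapping the cluster actually applies.

```shell
# Hypothetical helper: approximate Hadoop's DEFAULT auth_to_local rule.
# A principal in the default realm maps to its first component,
# e.g. atlas/dev2@TTBIGDATA.COM -> atlas. Custom RULE:[...] entries are NOT handled.
short_name() {
  principal=$1
  default_realm=$2
  realm=${principal##*@}          # text after the last '@'
  if [ "$realm" = "$principal" ] || [ "$realm" != "$default_realm" ]; then
    echo "DEFAULT rule does not apply to '$principal' (default realm: $default_realm)" >&2
    return 1
  fi
  primary=${principal%@*}         # drop '@REALM'
  printf '%s\n' "${primary%%/*}"  # drop '/host' if present
}
```

In this example, `short_name atlas/dev2@TTBIGDATA.COM TTBIGDATA.COM` prints `atlas`. That is the user name the grant has to target.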

# 4. Grant HBase Permissions

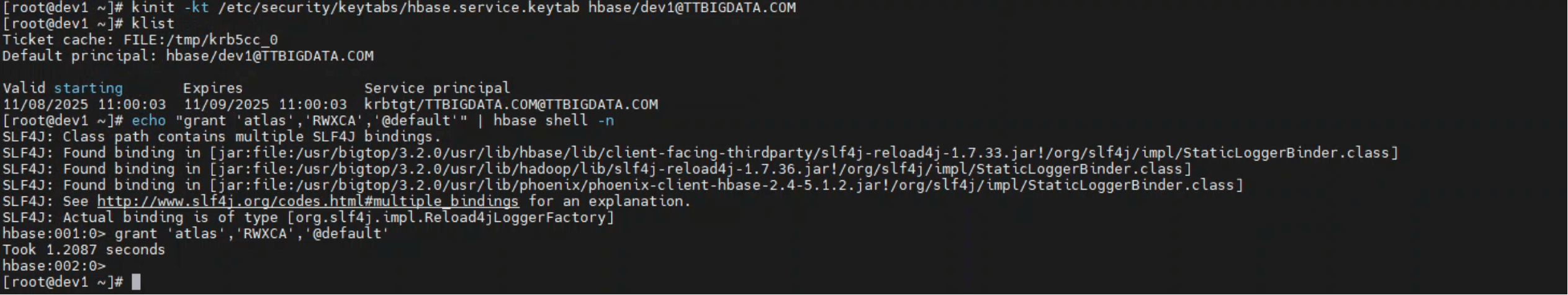

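The atlas user can authenticate, but it has not been granted permission to operate on the table. Grant the permissions using the HBase administrator principal (hbase).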

- Switch to the HBase administrator

```shell
kinit -kt /etc/security/keytabs/hbase.service.keytab hbase/dev1@TTBIGDATA.COM
```

Verify the login:

```text
[root@dev1 ~]# kinit -kt /etc/security/keytabs/hbase.service.keytab hbase/dev1@TTBIGDATA.COM
[root@dev1 ~]# klist
Ticket cache: FILE:/tmp/krb5cc_0
Default principal: hbase/dev1@TTBIGDATA.COM

Valid starting       Expires              Service principal
11/08/2025 11:00:03  11/09/2025 11:00:03  krbtgt/TTBIGDATA.COM@TTBIGDATA.COM
```
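Before granting, it helps to confirm that the ticket cache holds the administrator principal and not a leftover atlas ticket. The sketch below reads klist output on stdin and compares the Default principal line with the expected name. It assumes the MIT Kerberos klist format shown above, and check_principal is a hypothetical name.

```shell
# Hypothetical helper: read `klist` output on stdin and check that the
# default principal in the ticket cache is the one we expect.
check_principal() {
  expected=$1
  # MIT klist prints a line of the form "Default principal: <name>".
  actual=$(sed -n 's/^Default principal: //p' | head -n 1)
  if [ "$actual" = "$expected" ]; then
    echo "OK: ticket cache holds $actual"
  else
    echo "unexpected principal '${actual:-none}', expected $expected" >&2
    return 1
  fi
}
```

A possible usage is `klist | check_principal hbase/dev1@TTBIGDATA.COM && echo "grant 'atlas','RWXCA','@default'" | hbase shell -n`. With this chain, the grant runs only when the admin ticket is active.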

- Run the grant command

```shell
echo "grant 'atlas','RWXCA','@default'" | hbase shell -n
```

Output:

```text
[root@dev1 ~]# echo "grant 'atlas','RWXCA','@default'" | hbase shell -n
SLF4J: Class path contains multiple SLF4J bindings.
SLF4J: Found binding in [jar:file:/usr/bigtop/3.2.0/usr/lib/hbase/lib/client-facing-thirdparty/slf4j-reload4j-1.7.33.jar!/org/slf4j/impl/StaticLoggerBinder.
SLF4J: Found binding in [jar:file:/usr/bigtop/3.2.0/usr/lib/hadoop/lib/slf4j-reload4j-1.7.36.jar!/org/slf4j/impl/StaticLoggerBinder.class]
SLF4J: Found binding in [jar:file:/usr/bigtop/3.2.0/usr/lib/phoenix/phoenix-client-hbase-2.4-5.1.2.jar!/org/slf4j/impl/StaticLoggerBinder.class]
SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.
SLF4J: Actual binding is of type [org.slf4j.impl.Reload4jLoggerFactory]
hbase:001:0> grant 'atlas','RWXCA','@default'
Took 1.2087 seconds
hbase:002:0>
[root@dev1 ~]#
```

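In the grant command, '@default' targets the whole default namespace, which contains default:atlas_janus. The @ prefix marks a namespace rather than a table. The permission string RWXCA combines the following letters:

| Permission | Meaning |
|---|---|
| R | Read |
| W | Write |
| X | Execute |
| C | Create |
| A | Admin |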
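A permission string is just a combination of the letters in this table. The hypothetical helper below (describe_perms) validates a string such as RWXCA and spells it out, so a typo is caught before it reaches the HBase shell. It is a sketch and only knows the five letters listed above.

```shell
# Hypothetical helper: validate an HBase permission string and spell it out.
describe_perms() {
  perms=$1
  case $perms in
    ''|*[!RWXCA]*)
      echo "invalid permission string '$perms' (allowed letters: R W X C A)" >&2
      return 1 ;;
  esac
  out=
  rest=$perms
  while [ -n "$rest" ]; do
    c=${rest%"${rest#?}"}   # first character
    rest=${rest#?}          # remaining characters
    case $c in
      R) word=Read ;;
      W) word=Write ;;
      X) word=Execute ;;
      C) word=Create ;;
      A) word=Admin ;;
    esac
    out="${out:+$out }$word"
  done
  printf '%s\n' "$out"
}
```

`describe_perms RWXCA` prints `Read Write Execute Create Admin`.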

Complete grant commands:

```shell
kinit -kt /etc/security/keytabs/hbase.service.keytab hbase/dev1@TTBIGDATA.COM
echo "grant 'atlas','RWXCA','@default'" | hbase shell -n
```
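The two commands can be wrapped in a small function that also runs user_permission '@default' right after the grant. The HBase shell then prints the resulting ACL for review. This is a sketch that assumes the keytab path and principals shown above. The function name grant_and_verify and the DRY_RUN variable are hypothetical. When DRY_RUN is set, the function only prints what it would run.

```shell
# Hypothetical wrapper around the combined commands above.
# Usage: grant_and_verify KEYTAB ADMIN_PRINCIPAL USER PERMS SCOPE
# With DRY_RUN set to a non-empty value, it only prints the commands.
grant_and_verify() {
  keytab=$1 admin=$2 user=$3 perms=$4 scope=$5
  # HBase shell input: grant, then list the resulting permissions on the scope.
  cmds=$(printf "grant '%s','%s','%s'\nuser_permission '%s'\n" \
    "$user" "$perms" "$scope" "$scope")
  if [ -n "${DRY_RUN:-}" ]; then
    printf 'kinit -kt %s %s\n%s\n' "$keytab" "$admin" "$cmds"
    return 0
  fi
  kinit -kt "$keytab" "$admin" || return 1
  # -n: non-interactive; the shell exits non-zero on the first error.
  printf '%s\n' "$cmds" | hbase shell -n
}
```

For this cluster, the call would be `grant_and_verify /etc/security/keytabs/hbase.service.keytab hbase/dev1@TTBIGDATA.COM atlas RWXCA @default`. Prefix it with `DRY_RUN=1` to preview the commands first.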

Restart Atlas. AccessDeniedException no longer appears in the log, and Atlas connects to HBase and creates its table.
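To confirm the fix from the log instead of by eye, a hypothetical check (check_no_access_denied) can count AccessDeniedException lines. Point it at a log written after the restart, because an older log will still contain the original failure. The log file location depends on the installation and is not given here.

```shell
# Hypothetical post-restart check: succeed only if the given log file
# contains no AccessDeniedException lines.
check_no_access_denied() {
  log=$1
  if [ ! -r "$log" ]; then
    echo "cannot read $log" >&2
    return 2
  fi
  # grep -c prints the number of matching lines (0 when there is none).
  count=$(grep -c 'AccessDeniedException' "$log")
  if [ "$count" -eq 0 ]; then
    echo "OK: no AccessDeniedException in $log"
  else
    echo "found $count line(s) with AccessDeniedException in $log" >&2
    return 1
  fi
}
```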
