[Kerberos Enabled] Trino Starts, but Connecting to Hive Fails


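Overview

- Scenario: Trino has started successfully in a Kerberos environment, but access to the Hive catalog fails;

- Symptom: the Hive Metastore log shows `SASL negotiation failure` / `Checksum failed`;

- Root cause: `hive.metastore.service.principal` does not match the principal the Hive Metastore actually uses;

- Fix: correct the `hive.properties` configuration, verify the Trino keytab, and run an end-to-end check with trino-cli.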

# I. Symptom: Trino Fails to Access Hive

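Trino has started successfully in the Kerberos environment. However, when it accesses the Hive catalog (for example, when running `show tables`), the Hive Metastore logs the following error: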

```
2025-11-11T11:20:15,420 ERROR [pool-9-thread-61]: transport.TSaslTransport (TSaslTransport.java:open(315)) - SASL negotiation failure
javax.security.sasl.SaslException: GSS initiate failed
	at com.sun.security.sasl.gsskerb.GssKrb5Server.evaluateResponse(GssKrb5Server.java:199) ~[?:1.8.0_202]
	at org.apache.thrift.transport.TSaslTransport$SaslParticipant.evaluateChallengeOrResponse(TSaslTransport.java:539) ~[hive-exec-3.1.3.jar:3.1.3]
	at org.apache.thrift.transport.TSaslTransport.open(TSaslTransport.java:283) [hive-exec-3.1.3.jar:3.1.3]
	at org.apache.thrift.transport.TSaslServerTransport.open(TSaslServerTransport.java:41) [hive-exec-3.1.3.jar:3.1.3]
	at org.apache.thrift.transport.TSaslServerTransport$Factory.getTransport(TSaslServerTransport.java:216) [hive-exec-3.1.3.jar:3.1.3]
	at org.apache.hadoop.hive.metastore.security.HadoopThriftAuthBridge$Server$TUGIAssumingTransportFactory$1.run(HadoopThriftAuthBridge.java:694) [hive-exec-3.1.3.jar:3.1.3]
	at org.apache.hadoop.hive.metastore.security.HadoopThriftAuthBridge$Server$TUGIAssumingTransportFactory$1.run(HadoopThriftAuthBridge.java:691) [hive-exec-3.1.3.jar:3.1.3]
	at java.security.AccessController.doPrivileged(Native Method) ~[?:1.8.0_202]
	at javax.security.auth.Subject.doAs(Subject.java:360) [?:1.8.0_202]
	at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1855) [hadoop-common-3.3.4.jar:?]
	at org.apache.hadoop.hive.metastore.security.HadoopThriftAuthBridge$Server$TUGIAssumingTransportFactory.getTransport(HadoopThriftAuthBridge.java:691) [hive-exec-3.1.3.jar:3.1.3]
	at org.apache.thrift.server.TThreadPoolServer$WorkerProcess.run(TThreadPoolServer.java:269) [hive-exec-3.1.3.jar:3.1.3]
	at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149) [?:1.8.0_202]
	at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624) [?:1.8.0_202]
	at java.lang.Thread.run(Thread.java:748) [?:1.8.0_202]
Caused by: org.ietf.jgss.GSSException: Failure unspecified at GSS-API level (Mechanism level: Checksum failed)
	at sun.security.jgss.krb5.Krb5Context.acceptSecContext(Krb5Context.java:856) ~[?:1.8.0_202]
	at sun.security.jgss.GSSContextImpl.acceptSecContext(GSSContextImpl.java:342) ~[?:1.8.0_202]
	at sun.security.jgss.GSSContextImpl.acceptSecContext(GSSContextImpl.java:285) ~[?:1.8.0_202]
	at com.sun.security.sasl.gsskerb.GssKrb5Server.evaluateResponse(GssKrb5Server.java:167) ~[?:1.8.0_202]
	... 14 more
Caused by: sun.security.krb5.KrbCryptoException: Checksum failed
	at sun.security.krb5.internal.crypto.Aes256CtsHmacSha1EType.decrypt(Aes256CtsHmacSha1EType.java:102) ~[?:1.8.0_202]
	at sun.security.krb5.internal.crypto.Aes256CtsHmacSha1EType.decrypt(Aes256CtsHmacSha1EType.java:94) ~[?:1.8.0_202]
	at sun.security.krb5.EncryptedData.decrypt(EncryptedData.java:175) ~[?:1.8.0_202]
	at sun.security.krb5.KrbApReq.authenticate(KrbApReq.java:281) ~[?:1.8.0_202]
	at sun.security.krb5.KrbApReq.<init>(KrbApReq.java:149) ~[?:1.8.0_202]
	at sun.security.jgss.krb5.InitSecContextToken.<init>(InitSecContextToken.java:108) ~[?:1.8.0_202]
	at sun.security.jgss.krb5.Krb5Context.acceptSecContext(Krb5Context.java:829) ~[?:1.8.0_202]
	at sun.security.jgss.GSSContextImpl.acceptSecContext(GSSContextImpl.java:342) ~[?:1.8.0_202]
	at sun.security.jgss.GSSContextImpl.acceptSecContext(GSSContextImpl.java:285) ~[?:1.8.0_202]
	at com.sun.security.sasl.gsskerb.GssKrb5Server.evaluateResponse(GssKrb5Server.java:167) ~[?:1.8.0_202]
	... 14 more
Caused by: java.security.GeneralSecurityException: Checksum failed
	at sun.security.krb5.internal.crypto.dk.AesDkCrypto.decryptCTS(AesDkCrypto.java:451) ~[?:1.8.0_202]
	at sun.security.krb5.internal.crypto.dk.AesDkCrypto.decrypt(AesDkCrypto.java:272) ~[?:1.8.0_202]
	at sun.security.krb5.internal.crypto.Aes256.decrypt(Aes256.java:76) ~[?:1.8.0_202]
	at sun.security.krb5.internal.crypto.Aes256CtsHmacSha1EType.decrypt(Aes256CtsHmacSha1EType.java:100) ~[?:1.8.0_202]
	at sun.security.krb5.internal.crypto.Aes256CtsHmacSha1EType.decrypt(Aes256CtsHmacSha1EType.java:94) ~[?:1.8.0_202]
	at sun.security.krb5.EncryptedData.decrypt(EncryptedData.java:175) ~[?:1.8.0_202]
	at sun.security.krb5.KrbApReq.authenticate(KrbApReq.java:281) ~[?:1.8.0_202]
	at sun.security.krb5.KrbApReq.<init>(KrbApReq.java:149) ~[?:1.8.0_202]
	at sun.security.jgss.krb5.InitSecContextToken.<init>(InitSecContextToken.java:108) ~[?:1.8.0_202]
	at sun.security.jgss.krb5.Krb5Context.acceptSecContext(Krb5Context.java:829) ~[?:1.8.0_202]
	at sun.security.jgss.GSSContextImpl.acceptSecContext(GSSContextImpl.java:342) ~[?:1.8.0_202]
	at sun.security.jgss.GSSContextImpl.acceptSecContext(GSSContextImpl.java:285) ~[?:1.8.0_202]
	at com.sun.security.sasl.gsskerb.GssKrb5Server.evaluateResponse(GssKrb5Server.java:167) ~[?:1.8.0_202]
	... 14 more
```


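Summary of symptoms

- Trino starts normally;

- when Trino connects to the Hive Metastore, the Metastore side throws `SASL negotiation failure`;

- the GSS layer reports `GSS initiate failed`;

- the underlying Kerberos decryption logic reports `Checksum failed`.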

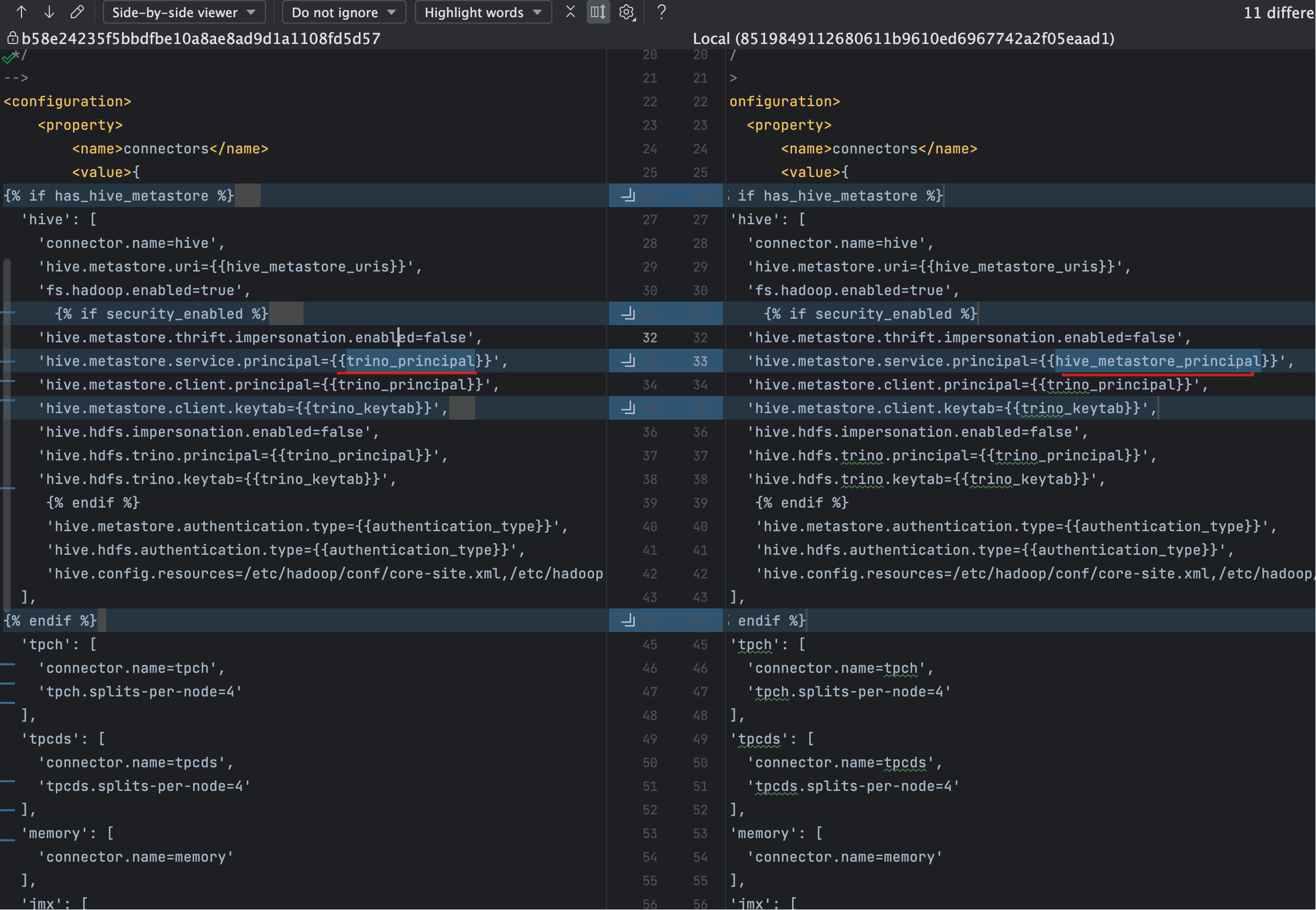
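You can search for the three signatures listed above directly. This confirms that a Metastore log shows this particular failure chain rather than some other SASL error. The sketch below is a hypothetical helper (`classify_sasl_failure` is not part of any tool). It reads the log on stdin because the Metastore log path is not given here.

```bash
#!/usr/bin/env bash
# Sketch: report which of the three signatures from the stack trace above
# appear in a Hive Metastore log read from stdin.
# classify_sasl_failure is a hypothetical helper name.
classify_sasl_failure() {
  local log sig found=1
  log="$(cat)"
  for sig in 'SASL negotiation failure' 'GSS initiate failed' 'Checksum failed'; do
    # Fixed-string match, so no regex escaping is needed
    if grep -qF -- "$sig" <<<"$log"; then
      echo "found: $sig"
      found=0
    fi
  done
  # Succeeds if at least one signature was found
  return "$found"
}
```

For example, run `classify_sasl_failure < "$METASTORE_LOG"`, where `METASTORE_LOG` is a placeholder for the actual log file. A hit on `Checksum failed` in particular indicates that the Metastore could not decrypt the ticket presented by the client.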

# II. Root Cause: Misconfigured hive.metastore.service.principal

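The stack trace shows that the failure happens while the Hive Metastore decrypts the Kerberos ticket presented by the client (`KrbApReq.authenticate` fails with `Checksum failed`). The typical root cause is a mismatch between the server principal the client expects and the one the server actually uses. The ticket is then encrypted with a key that the Metastore does not hold.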

During troubleshooting, attention turned to `hive.metastore.service.principal`, and its value turned out to be wrong. After it was changed to the correct principal, the error disappeared.

The corresponding configuration is shown in the screenshot below.

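Key point

`hive.metastore.service.principal` must exactly match the service principal that the Hive Metastore process actually uses. This includes the host name (the result of expanding `_HOST`) and the realm.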

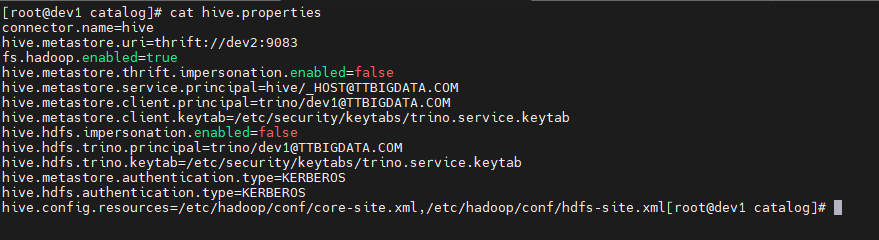

# III. hive.properties on the Trino Side

In Trino, the Hive catalog is configured in `catalog/hive.properties`. Once Trino is running, you can inspect the file on the server:

```
[root@dev1 catalog]# cat hive.properties
connector.name=hive
hive.metastore.uri=thrift://dev2:9083
fs.hadoop.enabled=true
hive.metastore.thrift.impersonation.enabled=false
hive.metastore.service.principal=hive/_HOST@TTBIGDATA.COM
hive.metastore.client.principal=trino/dev1@TTBIGDATA.COM
hive.metastore.client.keytab=/etc/security/keytabs/trino.service.keytab
hive.hdfs.impersonation.enabled=false
hive.hdfs.trino.principal=trino/dev1@TTBIGDATA.COM
hive.hdfs.trino.keytab=/etc/security/keytabs/trino.service.keytab
hive.metastore.authentication.type=KERBEROS
hive.hdfs.authentication.type=KERBEROS
hive.config.resources=/etc/hadoop/conf/core-site.xml,/etc/hadoop/conf/hdfs-site.xml
[root@dev1 catalog]#
```


Several of these properties relate directly to Kerberos:

| Property | Description |
|---|---|
| `hive.metastore.service.principal` | Principal of the Metastore service; must match the one actually configured on the Hive side |
| `hive.metastore.client.principal` | Client principal that Trino uses to access the Metastore |
| `hive.metastore.client.keytab` | Path to the keytab for the client principal |
| `hive.hdfs.trino.principal` / `hive.hdfs.trino.keytab` | Principal and keytab that Trino uses to access HDFS |

In this case, a wrong value for `hive.metastore.service.principal` is what triggered the `Checksum failed` error on the Metastore side.
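A quick way to catch a missing or misspelled key is to check that the Kerberos-related properties in the table are actually set. The sketch below assumes a plain `key=value` file like the one above, and `prop` and `check_hive_kerberos` are hypothetical helper names. It only checks that the keys are present. Whether the service principal is correct still has to be checked against the Hive side (`hive.metastore.kerberos.principal` in `hive-site.xml`).

```bash
#!/usr/bin/env bash
# Sketch: presence check for the Kerberos-related keys of a Trino Hive catalog
# file. prop and check_hive_kerberos are hypothetical helper names. The parser
# only handles simple "key=value" lines (no ':' separators, no continuations).

# Print the last value assigned to key $2 in properties file $1.
prop() {
  awk -v k="$2" '
    /^[ \t]*[#!]/ { next }                 # skip comment lines
    {
      i = index($0, "=")
      if (i == 0) next
      key = substr($0, 1, i - 1)
      val = substr($0, i + 1)
      gsub(/^[ \t]+|[ \t\r]+$/, "", key)   # trim spaces and a trailing CR
      gsub(/^[ \t]+|[ \t\r]+$/, "", val)
      if (key == k) last = val
    }
    END { if (last != "") print last }
  ' "$1"
}

# Fail (and name the key) if Kerberos is enabled but a required key is empty.
check_hive_kerberos() {
  local file="$1" rc=0 key
  [[ "$(prop "$file" hive.metastore.authentication.type)" == KERBEROS ]] || return 0
  for key in hive.metastore.service.principal \
             hive.metastore.client.principal \
             hive.metastore.client.keytab; do
    if [[ -z "$(prop "$file" "$key")" ]]; then
      echo "missing: $key" >&2
      rc=1
    fi
  done
  return "$rc"
}
```

Run from the `catalog` directory shown above, `check_hive_kerberos hive.properties && echo ok` should print `ok` for that file.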

`_HOST` expansion

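In a value such as `hive/_HOST@TTBIGDATA.COM`, `_HOST` is replaced at runtime with the actual host name (FQDN), for example:

`hive/dev2@TTBIGDATA.COM`

This principal must exist in the KDC. The keytab that the Metastore loads at startup must also contain it.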
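Reproducing the substitution shows exactly which principal Trino will request a ticket for. According to the Trino Hive connector documentation, `_HOST` is replaced with the host name of the metastore server being connected to. The sketch below assumes that host is taken from `hive.metastore.uri` and lowercased, as Hadoop's `SecurityUtil.getServerPrincipal` does. `expand_host_principal` is a hypothetical helper name.

```bash
#!/usr/bin/env bash
# Sketch: expand _HOST in a service principal using the host of a thrift://
# metastore URI, lowercased as Hadoop's SecurityUtil does (an assumption about
# Trino's behaviour). expand_host_principal is a hypothetical helper name.
expand_host_principal() {
  local principal="$1" uri="$2" host re='^([^/@]+)/_HOST(@.*)?$'
  host="${uri#*://}"      # thrift://dev2:9083 -> dev2:9083
  host="${host%%[:/]*}"   # dev2:9083 -> dev2
  if [[ $principal =~ $re ]]; then
    # service + "/" + lowercased host + optional "@REALM"
    printf '%s/%s%s\n' "${BASH_REMATCH[1]}" "${host,,}" "${BASH_REMATCH[2]}"
  else
    printf '%s\n' "$principal"   # no _HOST placeholder: return unchanged
  fi
}
```

`expand_host_principal 'hive/_HOST@TTBIGDATA.COM' 'thrift://dev2:9083'` prints `hive/dev2@TTBIGDATA.COM`. On the Metastore side, `_HOST` in `hive.metastore.kerberos.principal` is typically expanded from the Metastore host's own canonical host name. A URI that uses a different name for the same machine (a short name versus an FQDN, for instance) can therefore make the two expansions disagree.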

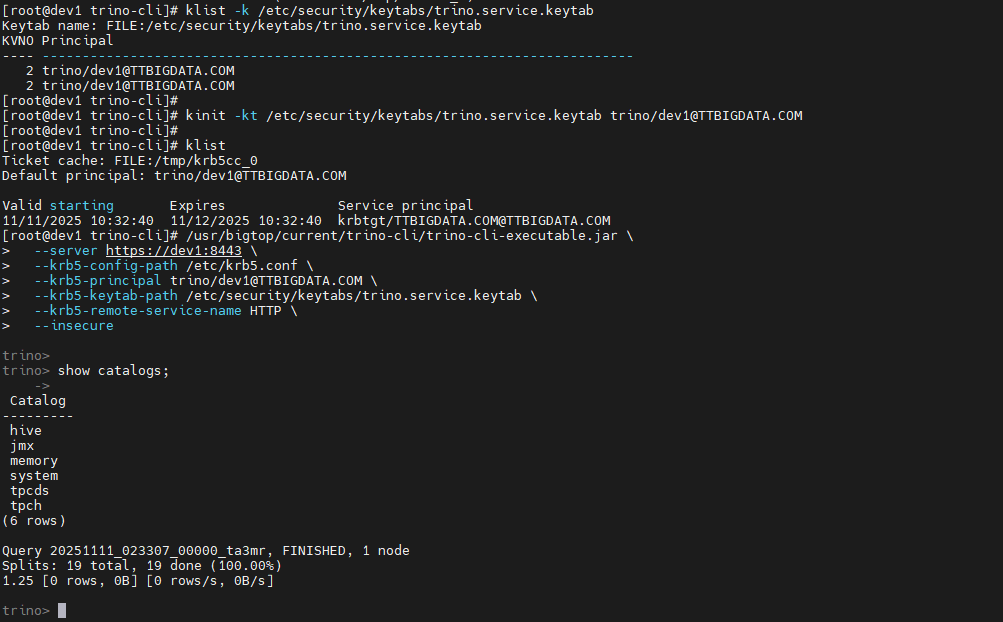

# IV. Verifying Trino's Kerberos Credentials (klist / kinit)

With the configuration confirmed, the next step is to verify that the keytab and principal Trino uses are valid.

# 1. List the principals in the keytab

```
[root@dev1 trino-cli]# klist -k /etc/security/keytabs/trino.service.keytab
Keytab name: FILE:/etc/security/keytabs/trino.service.keytab
KVNO Principal
---- --------------------------------------------------------------------------
   2 trino/dev1@TTBIGDATA.COM
   2 trino/dev1@TTBIGDATA.COM
[root@dev1 trino-cli]#
```

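Scripting the same check makes it easy to repeat on each host. The sketch reads `klist -k` output on stdin, and `keytab_lists` and `keytab_kvnos` are hypothetical helper names. The two identical rows above most likely belong to the same principal stored with different encryption types, which `klist -ke` would show.

```bash
#!/usr/bin/env bash
# Sketch: helpers over `klist -k` output read from stdin.
# keytab_lists and keytab_kvnos are hypothetical helper names.

# Succeed only if principal $1 appears in a "KVNO Principal" row.
keytab_lists() {
  awk -v p="$1" '$1 ~ /^[0-9]+$/ && $2 == p { found = 1 } END { exit !found }'
}

# Print each distinct KVNO stored for principal $1, in order of appearance.
keytab_kvnos() {
  awk -v p="$1" '$1 ~ /^[0-9]+$/ && $2 == p && !seen[$1]++ { print $1 }'
}
```

For example, `klist -k /etc/security/keytabs/trino.service.keytab | keytab_lists trino/dev1@TTBIGDATA.COM && echo ok`. On dev2, the same check against the keytab named by `hive.metastore.kerberos.keytab.file` should find `hive/dev2@TTBIGDATA.COM`. The principal may be present while `Checksum failed` persists. In that case, compare `keytab_kvnos` with the key version reported by MIT Kerberos `kvno hive/dev2@TTBIGDATA.COM` (run with a valid TGT). A mismatch reveals a stale keytab, which is another common cause of this error.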

# 2. Obtain a ticket with the keytab

```
[root@dev1 trino-cli]# kinit -kt /etc/security/keytabs/trino.service.keytab trino/dev1@TTBIGDATA.COM
[root@dev1 trino-cli]#
[root@dev1 trino-cli]# klist
Ticket cache: FILE:/tmp/krb5cc_0
Default principal: trino/dev1@TTBIGDATA.COM

Valid starting       Expires              Service principal
11/11/2025 10:32:40  11/12/2025 10:32:40  krbtgt/TTBIGDATA.COM@TTBIGDATA.COM
```


What this verifies

- whether the keytab file is valid;

- whether the principal in the keytab matches the Trino configuration;

- whether the Kerberos client can communicate with the KDC.

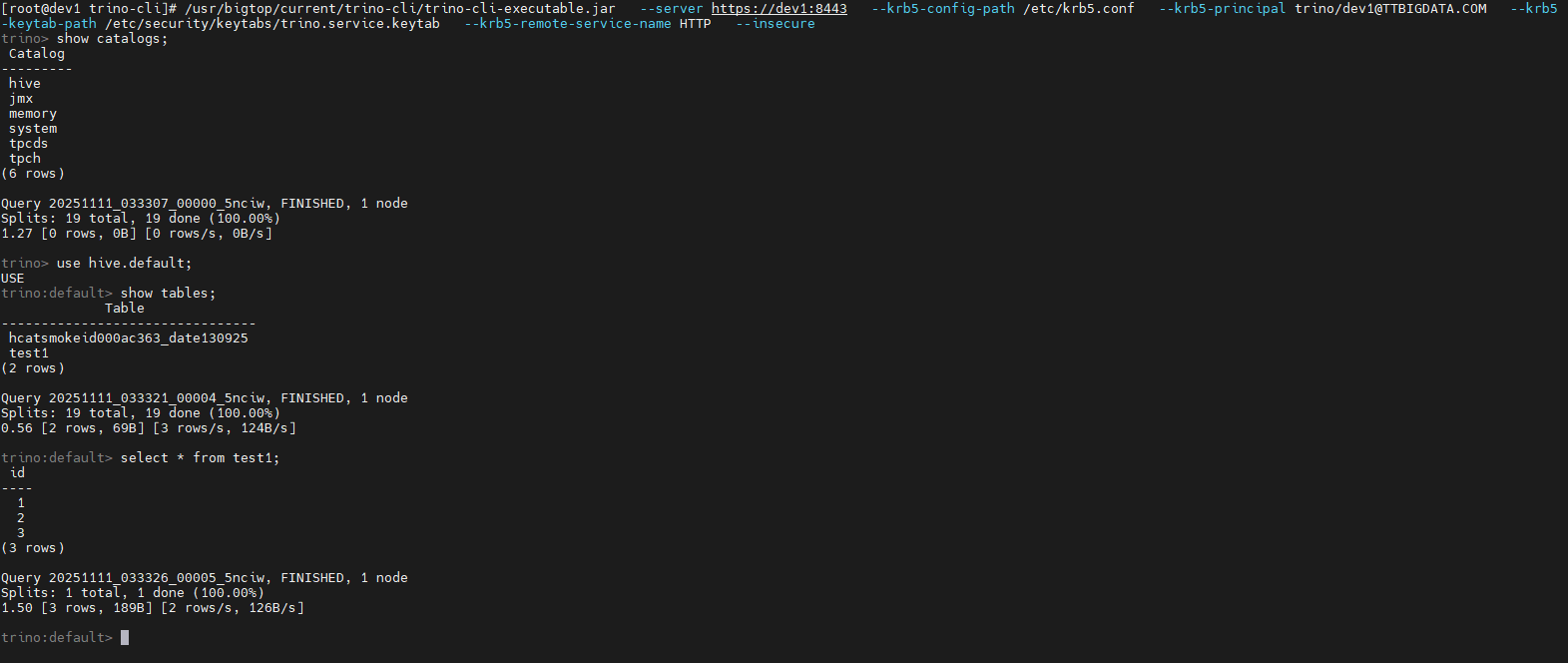
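The three checks above can be wrapped into one step. In this sketch, a successful `kinit` covers the first and third checks (the keytab key is usable and the KDC answers). Comparing the ticket cache's default principal covers the second. `cache_principal` and `verify_ticket` are hypothetical helper names.

```bash
#!/usr/bin/env bash
# Sketch: the keytab / principal / KDC checks in one function.
# cache_principal and verify_ticket are hypothetical helper names.

# Print the "Default principal" line's value from `klist` output on stdin.
cache_principal() {
  awk 'sub(/^Default principal:[ \t]*/, "") { print; exit }'
}

# kinit from the keytab, then confirm the cache holds the expected principal.
verify_ticket() {
  local keytab="$1" principal="$2" actual
  kinit -kt "$keytab" "$principal" || { echo "kinit failed" >&2; return 1; }
  actual="$(klist | cache_principal)"
  if [[ "$actual" != "$principal" ]]; then
    echo "cache holds '$actual', expected '$principal'" >&2
    return 1
  fi
}
```

Usage: `verify_ticket /etc/security/keytabs/trino.service.keytab trino/dev1@TTBIGDATA.COM && echo ok`. Like the manual `kinit`, this overwrites the default ticket cache (here `/tmp/krb5cc_0`).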

# V. Verifying the Trino ↔ Hive Path with trino-cli

Once the configuration and credentials are in order, run an end-to-end access check with trino-cli.

# 1. Environment variables and kinit

```bash
export JAVA_HOME=/opt/modules/jdk-23.0.2+7
export PATH="$JAVA_HOME/bin:$PATH"
kinit -kt /etc/security/keytabs/trino.service.keytab trino/dev1@TTBIGDATA.COM
```


# 2. Start trino-cli with Kerberos options

```bash
/usr/bigtop/current/trino-cli/trino-cli-executable.jar \
  --server https://dev1:8443 \
  --krb5-config-path /etc/krb5.conf \
  --krb5-principal trino/dev1@TTBIGDATA.COM \
  --krb5-keytab-path /etc/security/keytabs/trino.service.keytab \
  --krb5-remote-service-name HTTP \
  --insecure
```


Once inside the CLI, first run:

```
trino>
trino> show catalogs;
->
 Catalog
---------
 hive
 jmx
 memory
 system
 tpcds
 tpch
(6 rows)

Query 20251111_023307_00000_ta3mr, FINISHED, 1 node
Splits: 19 total, 19 done (100.00%)
1.25 [0 rows, 0B] [0 rows/s, 0B/s]

trino>
```


The `hive` catalog is registered correctly.

Running `show tables;` and similar statements then returns the Hive table list normally.
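For a repeatable check without an interactive session, you can pass the same connection options to the CLI in batch mode with `--execute`. This is a sketch: `trino_krb`, `has_line` and `check_trino_hive` are hypothetical helper names. It also assumes that the CLI version in use supports the `TSV` output format.

```bash
#!/usr/bin/env bash
# Sketch: non-interactive version of the trino-cli check. Server, paths and
# principal are the ones used above; the function names are hypothetical.
trino_krb() {
  /usr/bigtop/current/trino-cli/trino-cli-executable.jar \
    --server https://dev1:8443 \
    --krb5-config-path /etc/krb5.conf \
    --krb5-principal trino/dev1@TTBIGDATA.COM \
    --krb5-keytab-path /etc/security/keytabs/trino.service.keytab \
    --krb5-remote-service-name HTTP \
    --insecure \
    "$@"
}

# Succeed if stdin contains a line exactly equal to $1.
has_line() { grep -qxF -- "$1"; }

# SHOW CATALOGS only needs the coordinator; SHOW SCHEMAS FROM hive has to
# reach the Hive Metastore, so it exercises the Kerberos path to it.
check_trino_hive() {
  trino_krb --output-format TSV --execute 'SHOW CATALOGS' | has_line hive || return 1
  trino_krb --output-format TSV --execute 'SHOW SCHEMAS FROM hive'
}
```

On success, `check_trino_hive` prints the schema list of the `hive` catalog. If the Metastore principal is wrong, the second statement is the one expected to fail.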

This completes the verification of Trino's access path to Hive.
